Generative AI has become a cornerstone of modern business strategy, enabling automation, personalization, and more. Whether it's for customer service, content creation, or software development, the need for scalable, efficient AI tools is critical.

Why Generative AI Is Critical for Today’s Businesses

Generative AI models, like those powering Amazon Bedrock, Azure OpenAI, and Google Vertex AI, offer unprecedented capabilities in language understanding, image generation, and code creation. They help businesses:

- Improve productivity by automating tasks.

- Personalize customer interactions at scale.

- Enhance decision-making through data-driven insights.

By leveraging AI, companies can enhance customer experiences, reduce operational costs, and innovate faster.

How Amazon, Microsoft, and Google Are Shaping the Future of AI

These three giants—Amazon, Microsoft, and Google—have been laying the foundation for AI’s future with powerful, cloud-based AI solutions. Each brings unique offerings, built on their cloud services, making them pivotal in the AI race:

- Amazon offers scalable AI services through AWS and Bedrock, providing businesses access to powerful AI models with seamless integration into the AWS cloud ecosystem, ideal for enterprises seeking flexibility and reliability.

- Microsoft integrates AI into its Azure ecosystem with OpenAI, leveraging its enterprise strength.

- Google brings its AI expertise with Vertex AI, backed by its leadership in search, language, and cloud computing.

This blog guides you through these three platforms (Amazon Bedrock, Azure OpenAI, and Google Vertex AI) by comparing them on key factors: models, pricing, integrations, and security features. The goal is to help you choose the right platform for your business’s requirements.

Understanding the Platforms

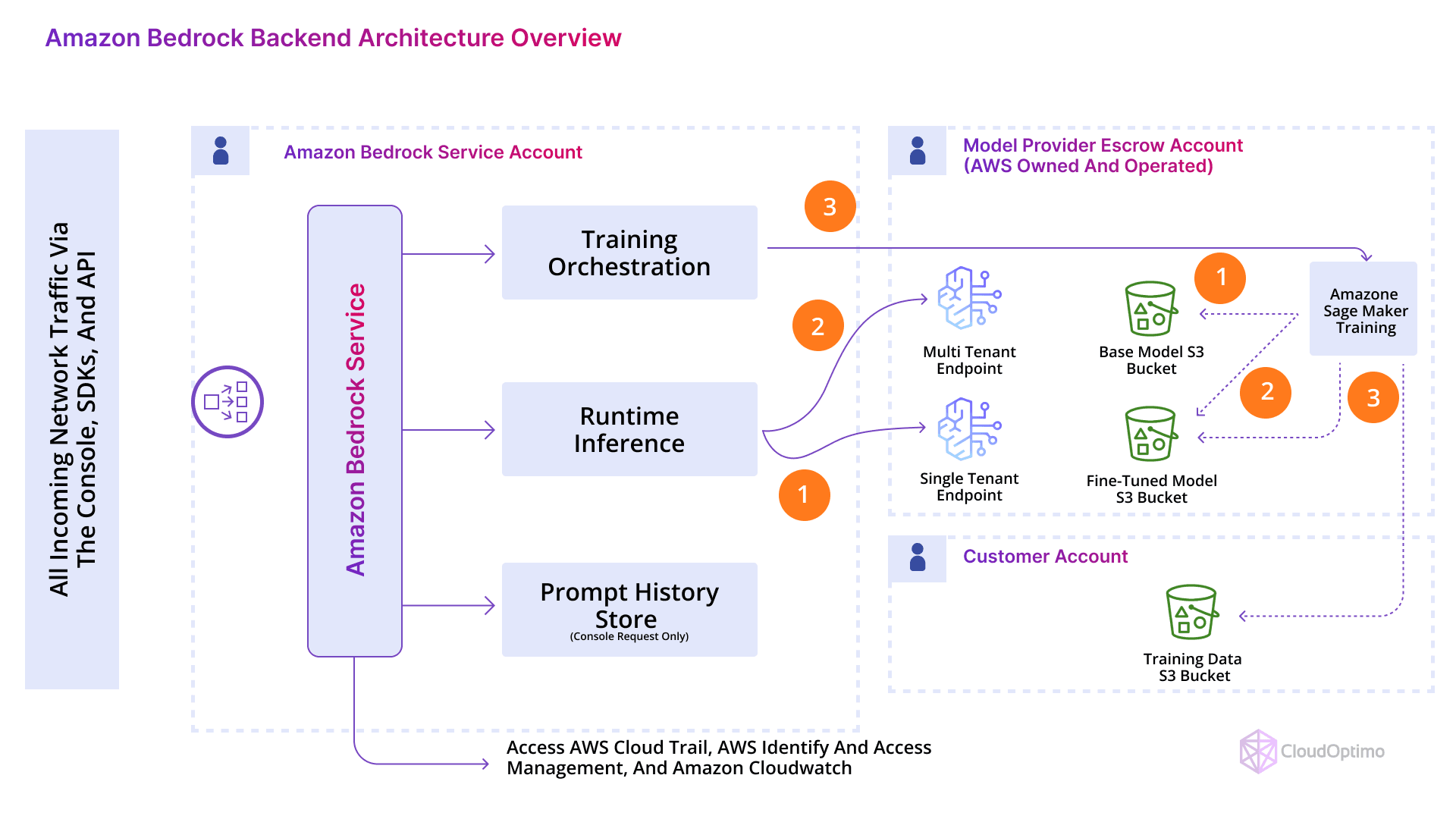

Amazon Bedrock

Source: AWS Community

Amazon Bedrock is a fully managed service by AWS, designed to simplify the deployment and scaling of generative AI applications. It provides access to a wide range of foundation models, such as Anthropic’s Claude, Stability AI’s Stable Diffusion, and Amazon’s own Titan family, allowing businesses to build AI solutions without managing infrastructure. The platform is tailored for enterprises already using AWS, and its integration with the AWS ecosystem makes it well suited to large-scale AI applications.

History & Purpose:

- Launched as part of Amazon Web Services (AWS) to provide businesses with easy access to advanced AI models.

- Focus on enterprises and scalability, enabling developers to focus on building applications rather than managing infrastructure.

- Part of the AWS ecosystem, leveraging Amazon's extensive cloud infrastructure to support powerful, flexible AI deployments.

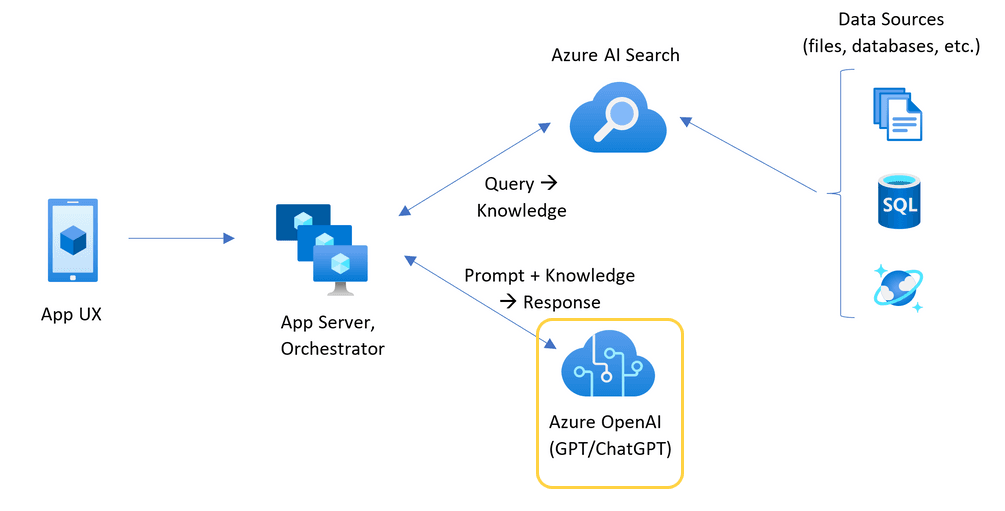

Azure OpenAI

Source: Azure

Azure OpenAI marks Microsoft’s entry into the world of generative AI, building a bridge between OpenAI’s cutting-edge models and the robust infrastructure of Azure. Launched in 2021, this service integrates seamlessly with Microsoft’s suite of products and cloud services, providing access to state-of-the-art models such as GPT-3 and GPT-4, DALL·E, and Whisper.

History & Purpose:

- Launched in collaboration with OpenAI in 2021, bringing OpenAI’s models to the Azure cloud ecosystem.

- Focus on offering AI solutions that integrate deeply with Microsoft’s other services, from Microsoft 365 to Azure’s security and compliance tools.

- Known for its enterprise-grade infrastructure, offering seamless integration with Microsoft’s ecosystem and providing companies with secure, scalable AI solutions.

Google Vertex AI

Google Vertex AI is a unified platform designed to help businesses develop and deploy machine learning models. Backed by Google Cloud, Vertex AI emphasizes the seamless automation of ML workflows, making it easy for businesses of all sizes to leverage Google’s advanced AI research and cloud infrastructure.

History & Purpose:

- Launched as part of Google Cloud to help businesses build and deploy AI models at scale.

- Focus on both developers and non-developers, offering a variety of tools to cater to a range of technical expertise.

- Powered by Google’s AI expertise, it leverages its deep learning frameworks, big data processing, and AI model training capabilities.

These platforms share a common goal: making advanced AI accessible, scalable, and production-ready for businesses of all sizes.

Questions to Ask Yourself Before You Choose

- Which cloud provider does my organization already use?

Migration friction is real. If you're already deep into AWS, Azure, or GCP, that ecosystem matters.

- What kind of models do I need?

Are you building a chatbot, code-generation assistant, analytics engine, or multimodal application?

- How important is pricing and cost transparency?

Are you running high-scale workloads that demand precise pricing or just experimenting at low volume?

- Do I need fine-tuning or just high-quality out-of-the-box performance?

Some platforms are stronger on plug-and-play, others are made for model customization.

- Who will use the platform: developers, analysts, or product teams?

Consider how developer-friendly or business-accessible the tools are. Azure and Google shine here with intuitive UIs.

Similarities Across the Platforms

Despite their differences, there are commonalities:

- Cloud-Based: All three platforms are built on top of major cloud services (AWS, Azure, and Google Cloud).

- Generative AI Models: Each platform provides access to powerful models for NLP, image generation, and even code generation.

- Focus on Ease of Use: All platforms aim to simplify AI development for businesses and developers, offering tools that lower the barrier to entry.

Key Differences: What Sets These Platforms Apart

While all three platforms—Amazon Bedrock, Azure OpenAI, and Google Vertex AI—aim to bring advanced AI capabilities to businesses, they differ significantly in architecture, accessibility, and strategic focus. Knowing how they vary across core capabilities is essential for choosing the right fit based on your business's size, goals, and technical readiness.

Let’s break down their key differences across seven critical areas, helping you align platform strengths with your organization’s specific AI strategy.

| Key Difference | Amazon Bedrock | Azure OpenAI | Google Vertex AI |
| --- | --- | --- | --- |
| AI Model Availability | Multi-provider support (Anthropic, AI21, Cohere, Stability AI) | Direct access to OpenAI’s GPT-4, DALL·E, Whisper | Native access to Google’s Gemini, PaLM, and Model Garden models |
| Pricing Structure | Pay-as-you-go based on model usage from third-party providers | Usage-based pricing with flexible enterprise plans | Modular pricing for model usage, tuning, and MLOps features |
| Integration & Ecosystem Fit | Seamless with AWS tools (SageMaker, Lambda, S3) | Native compatibility with Microsoft 365, Power Platform, Azure DevOps | Tight synergy with BigQuery, AutoML, and the broader Google Cloud stack |
| Security & Compliance | Uses AWS IAM, KMS, and region-specific controls | Built on Azure’s enterprise-grade security (RBAC, data residency, etc.) | Leverages zero-trust model, encryption, and regional data isolation |
| Fine-Tuning Capabilities | RAG support, limited fine-tuning, relies on underlying provider | Offers fine-tuning for GPT models via Azure ML | Full prompt tuning, adapter tuning, and model training options |
| MLOps & Lifecycle Management | Depends on SageMaker for model lifecycle tools | Azure ML Studio provides complete MLOps lifecycle support | Vertex Pipelines, Feature Store, Monitoring, and built-in deployment |
| Multimodal Capabilities | Varies by provider (e.g., Stability AI for images) | Supports vision (GPT-4-V), audio (Whisper), and image generation (DALL·E) | Gemini supports native text, vision, audio, and code workflows |

This table offers a high-level snapshot of how each platform stacks up across critical decision-making categories. With this foundation in place, we’ll now explore each of these areas in detail—starting with AI Model Access & Capabilities, followed by Pricing, Integration, Security, and beyond.

AI Model Access & Capabilities: Which One Has What You Need?

Each cloud provider offers distinct capabilities when it comes to accessing and using foundation models. This includes the number and variety of models available, the ease of integrating them into apps, and the level of developer control for inference, configuration, and scaling.

Amazon Bedrock: Unified Access to Multiple Model Providers

Amazon Bedrock stands out for its multi-vendor model access—delivered via a standardized, serverless API layer that abstracts infrastructure complexity.

Key Capabilities:

- Third-party foundation models include:

  - Anthropic (Claude 2.1, Claude Instant)

  - AI21 Labs (Jurassic-2 Mid, Ultra)

  - Cohere (Command family)

  - Stability AI (Stable Diffusion)

- Amazon’s Titan models (Titan Text and Titan Embeddings)

- Standardized API call structure, meaning you can swap models without rewriting your code.

- No provisioning or containerization is required. Models are serverless and auto-scale behind the scenes.
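To make the "swap models without rewriting your code" point concrete, here is a minimal sketch built on the Converse operation of the `bedrock-runtime` client in boto3. The helper name `ask` and its default settings are illustrative assumptions, and the client is passed in as an argument so the function can be exercised with a stub. Which models accept this call depends on your region and account.

```python
from typing import Any


def ask(client: Any, model_id: str, prompt: str, max_tokens: int = 256) -> str:
    """Send one user prompt via the Converse API and return the reply text.

    `client` is expected to behave like boto3.client("bedrock-runtime").
    """
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # The reply arrives as a list of content blocks; join the text ones.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)
```

With a real client, switching vendors would mean changing only the `model_id` argument, for example from `anthropic.claude-v2:1` to `amazon.titan-text-express-v1`, assuming both are enabled for your account.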

Use Cases Suited For:

- Businesses comparing outputs across vendors before committing.

- Applications that need vendor redundancy or fallback options.

- Teams looking to reduce infrastructure complexity during experimentation.
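The vendor redundancy use case can be sketched as a simple fallback chain. This is an illustrative pattern, not a Bedrock feature: `first_successful` is a hypothetical helper, and each provider is just a callable that takes a prompt and returns text (for instance, a thin wrapper around one model invocation).

```python
from typing import Callable, Sequence


def first_successful(
    prompt: str,
    providers: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each (name, call) pair in order; return the first reply that succeeds."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))
```

In production you would likely narrow the caught exceptions to throttling and timeout errors, so that genuine bugs are not silently masked by the fallback.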

Azure OpenAI: Direct Gateway to OpenAI’s Proprietary Models

Azure OpenAI gives you exclusive enterprise access to OpenAI’s production-ready models, fully hosted within Microsoft’s secure cloud ecosystem.

Key Capabilities:

- Direct access to:

  - GPT-3.5 Turbo

  - GPT-4 and GPT-4 Turbo

  - Codex (for code generation)

  - Whisper (speech-to-text, where enabled)

- Access is delivered via Azure’s endpoint deployments, ensuring consistency with Azure’s governance and compliance architecture.

- You can scale model endpoints regionally, isolate traffic, and manage quotas easily through Azure’s unified cloud management interface.
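Because access goes through named deployments, a request targets your own resource and deployment rather than a global model name. The sketch below only builds the request (URL, headers, JSON body) for the chat completions REST route; the resource name, deployment name, and the `api_version` default are placeholder assumptions you would replace with your own values.

```python
import json
from urllib.parse import quote


def build_chat_request(
    resource: str,
    deployment: str,
    api_key: str,
    user_text: str,
    api_version: str = "2024-02-01",  # assumed; use the version your resource supports
) -> dict:
    """Assemble the pieces of a chat completions call to an Azure OpenAI deployment."""
    url = (
        f"https://{resource}.openai.azure.com/openai/deployments/"
        f"{quote(deployment, safe='')}/chat/completions?api-version={api_version}"
    )
    headers = {"api-key": api_key, "Content-Type": "application/json"}
    body = json.dumps(
        {"messages": [{"role": "user", "content": user_text}], "max_tokens": 200}
    )
    return {"url": url, "headers": headers, "body": body}
```

The returned dictionary can be handed to any HTTP client. Keeping request construction separate from sending makes it easy to log or test what would go over the wire.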

Use Cases Suited For:

- Companies standardizing on GPT-4 for core workflows.

- Enterprises needing compliance-grade AI deployment in regulated industries.

- Applications demanding predictable performance with a consistent model backend.

Google Vertex AI: Deep Model Coverage + Open Ecosystem

Vertex AI offers the most diverse and open model catalog among the three, combining Google-native foundation models with a large library of open-source and partner models.

Key Capabilities:

- Access to Google’s own models like:

  - Gemini 1.5 Pro

  - PaLM 2 (Text-Bison, Chat-Bison)

- Integration with Model Garden, offering:

  - Over 100 models, including T5, LLaMA, BERT, Gemma, and others.

- Supports multi-model workflows, including hybrid use of proprietary and OSS models within the same ML pipeline.

- Access is either prebuilt (fully managed endpoints) or custom-deployed in your own Vertex-hosted environment.

Use Cases Suited For:

- Teams that want the flexibility to explore open-source models alongside proprietary ones.

- Research and experimentation-focused orgs that require broad model access.

- Use cases needing both classic ML models and GenAI in the same environment.

| Feature | Amazon Bedrock | Azure OpenAI | Google Vertex AI |
| --- | --- | --- | --- |
| Model Access Type | Multi-vendor (Anthropic, AI21, Cohere, Titan, etc.) | OpenAI only (GPT-4, GPT-3.5, Codex) | Google + OSS via Model Garden (Gemini, PaLM, T5, etc.) |
| Execution Mode | Fully serverless, API-based | Azure-hosted, provisioned endpoints | Managed or user-deployed endpoints |
| Multi-Model Switching | Seamless via common API layer | Not applicable (single model family) | Flexible: switch between open-source, third-party, and Google-native models |
| Custom Model Hosting | Not supported | Not applicable | Fully supported |
| Primary Focus | Model variety & ease of use | Consistent GPT access at scale | Flexibility, experimentation, and hybrid ML pipelines |
| Ideal For | Testing multiple vendors quickly | GPT-focused enterprise workloads | Broad experimentation, OSS model fans, and full ML lifecycle |

Pricing & Cost Transparency

When it comes to adopting AI platforms, pricing transparency and predictability are critical—especially at scale. Each platform structures costs differently: by model, usage, infrastructure, or feature usage.

Let’s break down actual pricing examples and compare apples to apples on token costs, tuning, and deployment.

Amazon Bedrock: Pay-Per-Model Pricing (Varies by Vendor)

Amazon Bedrock provides access to models from several third-party vendors, and each vendor sets their pricing. The pricing is typically based on token usage (input + output) in a pay-as-you-go model. You are billed for every 1,000 tokens processed.

Pricing Breakdown (as of 2024):

- Claude Instant 1.2:

  - Input: $0.0008 per 1K tokens

  - Output: $0.0024 per 1K tokens

- Claude 2.1:

  - Input: $0.0080 per 1K tokens

  - Output: $0.0240 per 1K tokens

- AI21 Jurassic-2 Mid:

  - Input: $0.0125 per 1K tokens

  - Output: $0.0125 per 1K tokens

- Titan Text Express:

  - Input: $0.0004 per 1K tokens

  - Output: $0.0016 per 1K tokens

Billing Model:

- No infrastructure costs; you only pay for the tokens you use.

- Token-based pricing means you’ll pay for both input and output tokens, so efficient prompt management is key to minimizing costs.
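A quick way to see how input and output tokens add up is to compute the cost of a single request. The sketch below uses the per-1K-token prices listed above (which may have changed since) and hypothetical short model keys; it is plain arithmetic, not an AWS API.

```python
# (input price, output price) in USD per 1K tokens, copied from the list above.
PRICES_PER_1K = {
    "claude-instant-1.2": (0.0008, 0.0024),
    "claude-2.1": (0.0080, 0.0240),
    "jurassic-2-mid": (0.0125, 0.0125),
    "titan-text-express": (0.0004, 0.0016),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request: input and output are billed separately."""
    input_price, output_price = PRICES_PER_1K[model]
    return input_tokens / 1000 * input_price + output_tokens / 1000 * output_price
```

For a request with 1,500 input tokens and 500 output tokens, this works out to about $0.024 on Claude 2.1 versus about $0.0014 on Titan Text Express, roughly a 17x difference for the same traffic. Trimming prompt length reduces the input half of that bill directly.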

Azure OpenAI: Transparent Token-Based Pricing + SLAs

Azure OpenAI utilizes OpenAI’s standard pricing and provides additional flexibility through Azure’s infrastructure. This includes dedicated resource allocation, regional hosting, and more granular control over usage via Azure’s cost management tools.

Pricing Breakdown (as of Q1 2024):

- GPT-3.5 Turbo:

  - Prompt: $0.0015 per 1K tokens

  - Completion: $0.0020 per 1K tokens

- GPT-4 Turbo:

  - Prompt: $0.01 per 1K tokens

  - Completion: $0.03 per 1K tokens

- DALL·E Image Generation:

  - ~$0.02 per image (pricing is image-based, not token-based)

- Fine-Tuning (GPT-3.5):

  - Training: $0.03 per 1K tokens

  - Fine-tuning cost: ~$0.008 per 1K tokens

Billing Model:

- Unified billing with other Azure cloud services, making it easier to manage AI expenses alongside other business operations.

- Reserved capacity and usage-based pricing: You can opt for reserved instances or scale as needed, with discounts at scale.

- Volume discounts are available for enterprises using significant amounts of tokens or requiring dedicated resources.

- Azure-native SLAs ensure enterprise-level reliability with uptime guarantees and redundancy.

Google Vertex AI: Modular Pricing with Training, Tuning, and Inference

Google Vertex AI takes a modular approach to pricing, with different cost categories for each stage of the machine learning lifecycle: training, tuning, and inference. You are billed for token usage, compute resources (CPU, GPU), and endpoint uptime.

Pricing Breakdown (as of 2024):

- Gemini 1.5 Pro:

  - Token Cost: $0.005 – $0.01 per 1K tokens (depending on usage)

  - Endpoint Cost: $0.10 per hour

  - Tuning Cost: $1.20 per hour (vCPU) + $2 per hour (GPU) (for fine-tuning)

- PaLM 2:

  - Token Cost: $0.003 – $0.005 per 1K tokens

  - Endpoint Cost: $0.05 per hour

  - Tuning Cost: $1.20 per hour (vCPU) + $2 per hour (GPU) (same as Gemini)

Billing Model:

- You are billed for:

  - Token usage (input and output tokens processed during inference).

  - Compute resources used during model training, fine-tuning, and inference (e.g., CPUs, GPUs).

  - Endpoint uptime, so you will incur costs based on how long your model is deployed for inference.

- Granular pricing for each aspect of model deployment and experimentation allows for detailed tracking and cost control.

- Google Cloud Billing offers advanced analytics tools, making it easier to keep track of costs at a granular level.
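Since the bill has several independent parts, it helps to model them separately. The following sketch combines the three components described above using the article's figures as defaults; the function name and the example workload are assumptions for illustration, and real invoices will include items not modeled here.

```python
def vertex_monthly_cost(
    tokens: int,
    token_price_per_1k: float,
    endpoint_hours: float,
    endpoint_rate: float,
    tuning_hours: float = 0.0,
    vcpu_rate: float = 1.20,  # USD per hour, from the breakdown above
    gpu_rate: float = 2.00,   # USD per hour, from the breakdown above
) -> dict:
    """Split an estimated monthly bill into token, endpoint, and tuning costs."""
    token_cost = tokens / 1000 * token_price_per_1k
    endpoint_cost = endpoint_hours * endpoint_rate
    tuning_cost = tuning_hours * (vcpu_rate + gpu_rate)
    return {
        "tokens": token_cost,
        "endpoint": endpoint_cost,
        "tuning": tuning_cost,
        "total": token_cost + endpoint_cost + tuning_cost,
    }
```

As a worked example, 50 million tokens at $0.005 per 1K, an endpoint running 730 hours at $0.10, and 10 hours of tuning come to roughly $250 + $73 + $32, or about $355 for the month. Note that the endpoint charge accrues even when traffic is zero.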

| Platform | Token Pricing | Fine-Tuning Pricing | Endpoint / Uptime Costs | Additional Notes |
| --- | --- | --- | --- | --- |
| Amazon Bedrock | $0.0004 – $0.0240 per 1K tokens (varies by model) | Not supported | No infrastructure cost (token-based) | Pay-as-you-go model per vendor, requires monitoring costs |
| Azure OpenAI | $0.0015 – $0.03 per 1K tokens (GPT-3.5 Turbo to GPT-4 Turbo) | $0.03 per 1K tokens (fine-tuning) | $0.05 – $0.10 per hour (deployment) | Unified billing with Azure, reserved and volume discounts |
| Google Vertex AI | $0.003 – $0.01 per 1K tokens (Gemini, PaLM) | $1.20 per hour (vCPU) + $2 per hour (GPU) | $0.05 – $0.10 per hour (deployment) | Granular cost model (compute, tokens, endpoints) |

Integration: Fitting AI into Your Existing Systems

Choosing an AI platform isn’t just about model quality—it’s also about how well it fits into your existing cloud, data, and application stack. This section compares how Bedrock, Azure OpenAI, and Vertex AI integrate with their respective ecosystems, offering plug-and-play value or deep customization depending on your cloud environment.

Amazon Bedrock: Native Fit Within the AWS Ecosystem

Bedrock is designed to work seamlessly with existing AWS services, providing a frictionless path to embed generative AI into real-time apps, backend systems, and data pipelines.

Integration Strengths:

- AWS Lambda: Easily trigger GenAI responses from serverless functions.

- Amazon SageMaker: Use Bedrock within custom ML workflows and pipelines.

- Amazon API Gateway + Step Functions: Ideal for orchestrating multi-step AI workflows.

- Data Connectors: Smooth integration with Amazon S3, DynamoDB, and Aurora for data-rich applications.

Best For:

- Organizations fully built on AWS.

- Teams looking for no-fuss integration with AWS-native storage, compute, and orchestration tools.

- Rapid prototyping using AWS serverless services.

Azure OpenAI: Deep Integration with Microsoft Apps & Stack

Microsoft’s big advantage? Familiarity. Azure OpenAI plugs directly into your Microsoft environment, giving you a frictionless way to supercharge existing tools like Microsoft 365, Power Platform, and Dynamics 365 with large language models.

Integration Strengths:

- Power Automate + Power Apps: Use GPT via connectors in low-code workflows.

- Microsoft 365 Copilot Architecture: Integrates with Word, Excel, Teams using the same backend.

- Azure Data Services: Tight integration with Azure Synapse, Azure Data Lake, and Azure SQL for AI-enhanced data flows.

- API Access: Fully aligned with Azure Resource Manager, RBAC, and Azure Monitor.

Best For:

- Microsoft-centric businesses and enterprises already using 365.

- Use cases where AI needs to live inside business productivity apps.

- Firms needing low-code AI access for business users.

Google Vertex AI: AI-Native Synergy with Google Cloud Tools

Vertex AI is built from the ground up to fit tightly within Google Cloud’s data-first, ML-heavy ecosystem, enabling highly customized pipelines and analytics-focused use cases.

Integration Strengths:

- BigQuery ML + GenAI: Use SQL to call models directly from BigQuery tables.

- AutoML & Vertex Pipelines: Combine classic ML and GenAI in production pipelines.

- GCS + Pub/Sub + Dataflow: High-throughput data pipelines built with native GenAI endpoints.

- Colab & Vertex Workbench: Smooth transition from notebooks to production pipelines.
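To illustrate calling a model from SQL, the sketch below assembles a query around BigQuery ML's `ML.GENERATE_TEXT` function. The model, table, and column names are placeholders, and the query assumes a remote model has already been created in the dataset; treat the exact options as something to verify against the current BigQuery ML documentation.

```python
def generate_text_sql(
    model: str,
    source_table: str,
    prompt_column: str,
    temperature: float = 0.2,
    max_output_tokens: int = 256,
) -> str:
    """Build a query that runs a text model over every row of a table.

    Names are interpolated as-is, so pass only trusted identifiers.
    """
    return (
        "SELECT *\n"
        "FROM ML.GENERATE_TEXT(\n"
        f"  MODEL `{model}`,\n"
        f"  (SELECT {prompt_column} AS prompt FROM `{source_table}`),\n"
        f"  STRUCT({temperature} AS temperature, "
        f"{max_output_tokens} AS max_output_tokens)\n"
        ")"
    )
```

The resulting string could be run in the BigQuery console or submitted through a client library. The appeal is that the data never leaves the warehouse to be processed.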

Best For:

- Teams heavily invested in analytics, data engineering, or open-source tooling.

- Projects needing fine-grained control over data flow + model orchestration.

- ML teams requiring custom MLOps and hybrid AI workflows.

Security, Privacy & Compliance: Keeping Your Data Safe

Security and compliance are non-negotiable for enterprise adoption of generative AI. This section explores the unique controls, certifications, and architectural strengths of each platform when it comes to identity, encryption, data residency, and governance.

Amazon Bedrock: Enterprise-Grade Controls from AWS

As part of the AWS ecosystem, Bedrock inherits robust security controls and enterprise readiness by default.

Key Features:

- IAM Integration: Uses AWS Identity and Access Management to enforce role-based permissions.

- KMS Encryption: Data is encrypted in transit (TLS) and at rest, with encryption keys managed through AWS Key Management Service.

- Private VPC Access: Supports deployment within isolated cloud environments for sensitive use cases.

- No Training on User Prompts: Models don’t retain or learn from customer input.
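As an illustration of the IAM integration, the sketch below generates a least-privilege policy document that allows invoking only an approved list of foundation models. The helper name, statement ID, and example model IDs are assumptions; adapt the region and model list to your own account before using anything like this.

```python
import json


def invoke_only_policy(region: str, model_ids: list[str]) -> str:
    """Return an IAM policy (JSON) that permits invoking only the listed models."""
    statement = {
        "Sid": "InvokeApprovedModelsOnly",
        "Effect": "Allow",
        "Action": [
            "bedrock:InvokeModel",
            "bedrock:InvokeModelWithResponseStream",
        ],
        # Foundation model ARNs have no account ID segment.
        "Resource": [
            f"arn:aws:bedrock:{region}::foundation-model/{model_id}"
            for model_id in model_ids
        ],
    }
    return json.dumps({"Version": "2012-10-17", "Statement": [statement]}, indent=2)
```

Attaching a policy like this to an application role means a misconfigured service cannot quietly start calling a more expensive or unapproved model.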

Compliance:

- ISO 27001, SOC 1/2/3, HIPAA, GDPR, FedRAMP (moderate) compliant.

Note: Refer to the official AWS documentation for details on Amazon Bedrock’s security architecture and regulatory compliance certifications.

Azure OpenAI: Enterprise-Level Governance via Microsoft Azure

Azure OpenAI is hosted within Microsoft’s highly compliant infrastructure, offering confidence to enterprises in regulated industries.

Key Features:

- Azure RBAC: Role-based access control for managing who can invoke or deploy models.

- Customer Lockbox: Optional service requiring customer approval before Microsoft accesses your data.

- Private Networking: Isolate model endpoints within your own Azure Virtual Network (VNet).

- Data Handling Transparency: Customer prompts and responses are not stored or used for training.

Compliance:

- HIPAA, GDPR, ISO 27001, SOC 1/2/3, FedRAMP High, HITRUST, and more.

Documentation Links:

- Azure OpenAI Compliance Documentation

- Azure OpenAI Security Baseline

- Azure AI Services Security Controls Policy

Note: Refer to the above links for complete details on Azure OpenAI’s compliance certifications, access policies, and governance framework.

Google Vertex AI: Fine-Grained Control with a Zero-Trust Philosophy

Vertex AI is designed with Google’s zero-trust cloud architecture, giving customers maximum control over data, region, and access policies.

Key Features:

- IAM + CMEK Support: Role-based access plus full support for Customer-Managed Encryption Keys.

- Resource Location Pinning: Choose specific data residency regions for compliance-sensitive workloads.

- VPC Service Controls: Prevent data exfiltration with service perimeter enforcement.

- Model Isolation: Inference doesn’t retain user data—computation is stateless.

Compliance:

- PCI DSS, ISO 27001/27017/27018, HIPAA, GDPR, CSA STAR, and more.

Note: Refer to Google Cloud’s official documentation for compliance information and details on Vertex AI’s zero-trust security practices.

| Feature / Control | Amazon Bedrock | Azure OpenAI | Google Vertex AI |
| --- | --- | --- | --- |
| Access Control | AWS IAM for granular policy-based permissions | Azure RBAC with layered identity access | IAM with fine-grained role assignment |
| Encryption | AWS KMS (Customer-managed or AWS-managed keys) | Azure Key Vault + Customer Lockbox for approval-based access | CMEK support + default encryption at rest and in transit |
| Private Networking Support | Yes, via VPC and endpoint isolation | Yes, deploy models within Azure VNet | Yes, VPC Service Controls with resource perimeter configuration |
| Prompt/Response Data Retention | Not stored or used for model training | Not stored, no training on customer data | No data persistence; stateless inference |
| Region-Specific Deployment | Supported in select AWS regions | Region-scoped deployments through Azure regions | Resource location pinning for regulatory compliance |
| Certifications & Compliance | ISO 27001, SOC 1/2/3, HIPAA, FedRAMP Moderate, GDPR | FedRAMP High, HITRUST, HIPAA, ISO 27001, GDPR, SOC 2 | PCI DSS, ISO 27001/27017/27018, HIPAA, GDPR, CSA STAR, SOC 2 |
| Security Philosophy | Centralized cloud-native controls | Enterprise-ready with layered visibility and lockbox protection | Zero-trust model with service perimeter enforcement |

Fine-Tuning & Customization

Fine-tuning is the key to making foundation models context-aware and behaviorally aligned with specific industries, tone, or tasks. Whether you want an assistant to mimic your brand voice or optimize results for legal, healthcare, or retail-specific terminology — fine-tuning bridges the gap between general intelligence and targeted performance.

Each platform approaches fine-tuning differently in terms of depth, control, and ease of use.

Amazon Bedrock: External Fine-Tuning Pipeline via SageMaker

Amazon Bedrock itself does not support in-place fine-tuning. Instead, AWS emphasizes retrieval-augmented generation (RAG) and external fine-tuning through SageMaker for any weight-level customization:

- SageMaker JumpStart supports tuning open-source models (e.g., Falcon, LLaMA, Mistral).

- Supports parameter-efficient techniques: LoRA, QLoRA, adapters.

- Full control over training parameters, epochs, evaluation datasets.

- Requires managing compute infrastructure, training jobs, and model hosting separately.

No direct fine-tuning for third-party foundation models (Anthropic, AI21, etc.) offered inside Bedrock.

Azure OpenAI: Streamlined GPT-3.5 Hosted Fine-Tuning

Azure offers direct fine-tuning support for GPT-3.5 models, hosted securely inside Azure infrastructure:

- Training input format: prompt-completion JSONL.

- Can create custom model variants with unique IDs.

- Trained models retain the same API interface as base GPT-3.5.

- No access to GPT-4 tuning (as of early 2025), though expected to arrive soon.
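Preparing the training file is usually the first practical step. The sketch below writes examples in the prompt-completion JSONL shape described above, one JSON object per line. The field names follow that description; chat-style models are typically tuned with a messages-based schema instead, so check the format your target model expects before uploading.

```python
import json


def to_jsonl(examples: list[dict]) -> str:
    """Validate prompt/completion pairs and serialize them as JSONL text."""
    lines = []
    for index, example in enumerate(examples):
        prompt = example.get("prompt", "").strip()
        completion = example.get("completion", "").strip()
        if not prompt or not completion:
            raise ValueError(f"example {index} is missing a prompt or completion")
        # ensure_ascii=False keeps non-English training text readable in the file.
        lines.append(
            json.dumps({"prompt": prompt, "completion": completion}, ensure_ascii=False)
        )
    return "\n".join(lines) + "\n"
```

Validating before upload is worthwhile because a single malformed line can fail an entire training job after you have already waited in the queue.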

No-code tuning pipelines are not available, but DevOps teams can script fine-tuning via the Azure CLI or REST APIs.

Google Vertex AI: Full-Stack Fine-Tuning with PaLM/Gemini

Google Vertex AI provides the broadest fine-tuning suite, offering both lightweight and deep model customization:

- Prompt tuning: minimal effort, fast results, no retraining needed.

- Adapter tuning: efficient fine-tuning using LoRA, prefix-tuning, PEFT techniques.

- Full model retraining:

  - Available for PaLM and Gemini models.

  - Uses Vertex AI Training pipelines with TPUs, GPUs.

  - Highly customizable: control batch size, learning rate schedulers, weight decay, etc.

- Option to integrate with Vertex Feature Store for structured input pipelines.

Supports managed pipelines or custom Docker training containers.
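To see why adapter methods such as LoRA count as "efficient", it helps to count parameters. LoRA freezes a weight matrix of shape d_out x d_in and trains two small matrices, B (d_out x r) and A (r x d_in), whose product is added to the frozen weights. The sketch below is generic arithmetic under that definition and is not tied to how any of these platforms implements tuning.

```python
def full_params(d_out: int, d_in: int) -> int:
    """Parameters updated when fine-tuning the whole weight matrix."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Parameters trained by LoRA: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)


def trainable_fraction(d_out: int, d_in: int, rank: int) -> float:
    """Share of the layer's parameters that LoRA actually trains."""
    return lora_params(d_out, d_in, rank) / full_params(d_out, d_in)
```

For a 4096 x 4096 layer at rank 8, LoRA trains 65,536 parameters instead of 16,777,216, which is about 0.39% of the layer. That gap is what makes adapter tuning so much cheaper in compute and storage than full retraining.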

MLOps: Model Deployment, Monitoring, and Scaling

Effective MLOps (Machine Learning Operations) is crucial for managing machine learning models once they're deployed. This includes scaling models in production, continuous monitoring for performance, tracking model versions, detecting data drift, and ensuring a seamless, automated lifecycle.

Each platform approaches MLOps in its own way, with different tools and services available for different use cases, from enterprise-scale pipelines to lightweight applications.

Amazon Bedrock: Leveraging SageMaker for MLOps

Amazon Bedrock doesn't natively include extensive MLOps tools but integrates seamlessly with Amazon SageMaker for end-to-end machine learning operations. For teams already embedded in the AWS ecosystem, this integration offers a powerful pipeline and model management workflow. However, Bedrock itself focuses on providing the foundational models, and all MLOps functionalities are delegated to SageMaker.

MLOps Features via SageMaker:

- SageMaker Pipelines: Automates the end-to-end ML workflow, including data processing, model training, and deployment.

- SageMaker Model Registry: Centralized storage for managing model versions, approvals, and model lifecycle states.

- Model Monitoring: Automatically monitors models in production for data drift, concept drift, and performance degradation.

- SageMaker Clarify: Adds fairness and explainability to deployed models, useful for compliance in regulated industries.

- Model Debugger: Analyzes models in training, detecting issues like overfitting, bias, or resource inefficiency.

- CI/CD with SageMaker: Automate deployment workflows with AWS CodePipeline, managing model updates and rollbacks.

The SageMaker ecosystem is rich, offering automated scaling, monitoring, and governance, but Bedrock’s role remains largely focused on providing access to foundational models rather than facilitating end-to-end workflows directly.

Azure OpenAI: Seamless MLOps with Azure ML Studio

Unlike Amazon Bedrock, Azure OpenAI benefits from tight integration with Azure ML Studio, a robust platform that provides a unified environment for managing and deploying machine learning models. This allows teams to manage the entire lifecycle, from model training to deployment, monitoring, and scaling, all within Azure’s cloud ecosystem.

MLOps Features via Azure ML:

- Azure Machine Learning Studio: A low-code environment for managing machine learning workflows, including model training, deployment, monitoring, and version control.

- Model Registry: Facilitates the tracking of different versions of the GPT models, including custom fine-tuned versions, which can be seamlessly deployed for specific tasks (e.g., customer service chatbots).

- Real-time Monitoring & Alerts: Azure provides detailed analytics on model usage and performance metrics, alerting you when models begin to drift or perform suboptimally.

- Automated Deployment: With Azure ML, deployment becomes a streamlined process. You can use A/B testing and blue/green deployments to reduce risk during updates and ensure reliability in production.

- Responsible AI: A suite of tools, including the Responsible AI dashboard, provides insight into model fairness, performance biases, and compliance with ethical standards.

- CI/CD Integration: Azure supports integration with Azure DevOps, GitHub Actions, and Docker containers to create automated deployment pipelines for your models.

The focus on Azure’s collaborative development environment and the ease of managing AI models within Azure’s ecosystem makes it a compelling choice for businesses looking for an all-in-one MLOps solution.
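The idea behind A/B and blue/green rollouts can be sketched in a few lines: send a fixed share of users to the new deployment and keep each user on the same side across requests. This is a generic illustration with hypothetical deployment labels, not Azure ML's own traffic-splitting mechanism, which is configured on the endpoint itself.

```python
import hashlib


def pick_deployment(user_id: str, green_percent: int) -> str:
    """Route a user to "green" (new) or "blue" (current) deterministically."""
    if not 0 <= green_percent <= 100:
        raise ValueError("green_percent must be between 0 and 100")
    # Hashing the user ID gives a stable bucket in [0, 100) per user.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "green" if bucket < green_percent else "blue"
```

Starting at a small percentage and raising it as monitoring stays healthy limits the blast radius of a bad model update, and dropping the value back to 0 acts as an instant rollback.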

Google Vertex AI: End-to-End MLOps with Advanced Customization

Google Vertex AI is built for end-to-end machine learning operations, offering a complete, holistic view of the MLOps pipeline. Vertex AI integrates with the Google Cloud ecosystem, offering tools for model building, training, deployment, monitoring, and scaling, all within a unified workflow. What sets Vertex AI apart is its focus on advanced MLOps capabilities and data-centric workflows.

MLOps Features via Vertex AI:

- Vertex Pipelines: A fully managed pipeline service that allows you to create, deploy, and manage custom workflows with tools like Kubeflow or TensorFlow Extended (TFX). Vertex Pipelines integrates CI/CD with GitOps principles to automate model training and deployment.

- Feature Store: A dedicated store to manage features used for training, enabling reproducibility and consistency when models are retrained. It helps avoid data leakage and inconsistencies between training and production models.

- Model Monitoring: Includes built-in features for drift detection, prediction analysis, and feedback loops. Google also provides automated retraining workflows triggered by data or model performance degradation.

- End-to-End Model Management: From model versioning to deployment and scaling, Google provides robust tools that allow users to fully manage their model lifecycle, including monitoring and logging.

- CI/CD and Custom Pipelines: Vertex supports custom Docker containers, Kubernetes-based orchestration, and can trigger automated workflows via Google Cloud Build, Terraform, and Cloud Functions.

- Responsible AI: Google integrates XAI (Explainable AI) tools and automated compliance checks, ensuring that models are transparent, fair, and compliant with regulations such as GDPR or CCPA.

Google’s end-to-end MLOps infrastructure is a perfect fit for organizations aiming for high scalability, advanced model monitoring, and the ability to rapidly iterate and deploy custom solutions.
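Drift detection, which all three monitoring stacks offer in some form, boils down to comparing the data a model sees in production with the data it was trained on. The sketch below computes the Population Stability Index (PSI), one common drift statistic, in plain Python. It is a generic illustration and not the method any of these services is documented to use internally.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline sample and a new sample."""
    low, high = min(expected), max(expected)
    width = (high - low) / bins or 1.0  # guard against a constant baseline

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - low) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids taking the log of zero for empty bins.
        return [max(count / len(values), 1e-6) for count in counts]

    baseline, current = shares(expected), shares(actual)
    return sum((c - b) * math.log(c / b) for b, c in zip(baseline, current))
```

Identical samples score 0, and the value grows as the distributions diverge. A widely quoted rule of thumb treats values above roughly 0.25 as a significant shift worth an alert or a retraining run, though the right threshold depends on the feature and the business impact.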

Multimodal Capabilities: Beyond Just Text

Modern AI doesn’t stop at text—images, audio, and video are becoming part of the generative AI experience. Each platform handles this expansion differently.

Amazon Bedrock: Limited & Provider-Dependent

Multimodal support in Bedrock depends on the third-party models it hosts, and features may vary widely.

What’s Supported:

- Image Generation via Stability AI’s Stable Diffusion.

- Basic Embedding Models (e.g., Cohere) that can handle mixed modalities (e.g., text-image pairing).

- No native support for vision or audio models yet.

Takeaways:

- Suitable for text-first use cases with optional image generation.

- Less ideal for unified multimodal experiences.

Azure OpenAI: Access to OpenAI’s Full Multimodal Stack

Azure supports vision and audio modalities via OpenAI’s latest models like GPT-4 with Vision, DALL·E 3, and Whisper.

What’s Supported:

- Image Interpretation with GPT-4 Vision.

- Image Generation via DALL·E 3 API.

- Speech-to-Text with Whisper for transcription.

Takeaways:

- Best choice for full-stack multimodal development via a single provider.

- Enables apps like smart document readers, voice bots, and image captioners.
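
As a rough illustration of image interpretation with GPT-4 with Vision, the sketch below builds the JSON body for a chat completions request that pairs a text question with an inline image. It only constructs the payload and makes no network call. The helper name `build_vision_request`, the placeholder image bytes, and the `max_tokens` value are assumptions for illustration; in practice the body would be sent to the chat completions endpoint of your own vision-capable deployment.

```python
import base64
from typing import Any, Dict


def build_vision_request(question: str, image_bytes: bytes,
                         mime_type: str = "image/png",
                         max_tokens: int = 300) -> Dict[str, Any]:
    """Build a chat completions body with one text part and one image part.

    The image is embedded as a base64 data URL, so no external hosting
    is needed for the example.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": question},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
        "max_tokens": max_tokens,
    }


# Placeholder bytes standing in for a real receipt image (assumption).
fake_image = b"\x89PNG placeholder bytes"
vision_body = build_vision_request("What is the total on this receipt?", fake_image)
```

Note that speech and image generation would go through separate model deployments (Whisper and DALL·E 3), each with its own request shape.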

Google Vertex AI: Native Multimodal from the Ground Up

With Gemini, Google takes a unified approach to multimodal intelligence—no stitching required.

What’s Supported:

- Native multimodal models (text, image, code, audio) under Gemini.

- Document & Image Question Answering with PaLM and Gemini APIs.

- Future support for video, under development via DeepMind integrations.

Takeaways:

- Ideal for fluid cross-modal tasks, like asking questions about a chart or interpreting receipts.

- High potential for next-gen multimodal assistants.
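
To show what "no stitching required" can look like in practice, here is a hedged sketch of a single Gemini-style request body that mixes text, an image, and an audio clip in one list of parts. It follows the general shape of the `generateContent` request (a `contents` list whose entries hold `parts`), but it only builds the payload and does not call any API. The helper name, the placeholder bytes, and the MIME types are assumptions, and the exact fields should be checked against the current Vertex AI reference.

```python
import base64
from typing import Any, Dict, List, Tuple


def build_gemini_request(prompt: str,
                         media: List[Tuple[str, bytes]]) -> Dict[str, Any]:
    """Build one request body holding a text prompt plus inline media parts.

    `media` is a list of (mime_type, raw_bytes) pairs; each becomes an
    inline, base64-encoded part alongside the text.
    """
    parts: List[Dict[str, Any]] = [{"text": prompt}]
    for mime_type, raw in media:
        parts.append({
            "inlineData": {
                "mimeType": mime_type,
                "data": base64.b64encode(raw).decode("ascii"),
            }
        })
    return {"contents": [{"role": "user", "parts": parts}]}


# Placeholder bytes standing in for a chart image and a voice note (assumption).
gemini_body = build_gemini_request(
    "Does the speaker's summary match the chart?",
    [("image/png", b"chart-bytes"), ("audio/mpeg", b"voice-note-bytes")],
)
```

The design point is that all modalities travel in one request to one model, rather than being routed to a vision model and a speech model separately.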

| Capability Category | Amazon Bedrock | Azure OpenAI | Google Vertex AI (Gemini) |
| --- | --- | --- | --- |
| Modality Coverage | Depends on third-party providers; varies by model | Full-stack multimodal via OpenAI (text, image, audio, code) | Unified modality support through Gemini family (text, image, audio, code) |
| Image Input Support | Not natively supported; relies on models like Stability AI (e.g., SDXL) | Supported via GPT-4 with Vision | Native support via Gemini 1.5 and Model Garden APIs |
| Image Output / Generation | Available via Stability AI integration (Stable Diffusion) | Available via DALL·E 3 | Available via Imagen models on Vertex AI |
| Audio Input (Speech Recognition) | Not available | Whisper (automatic speech-to-text via Azure OpenAI API) | Gemini models support audio input, particularly in Gemini 1.5 Pro |
| Audio Output (Text-to-Speech) | Not offered through Bedrock directly | Available via Azure Cognitive Services, not OpenAI models | Available via complementary Google Cloud services (Text-to-Speech API) |
| Code Understanding (as input) | Limited to capabilities of individual models (e.g., Anthropic Claude) | Supported in GPT-4 (e.g., code snippets, structured reasoning) | Fully supported natively in Gemini (code, syntax, execution awareness) |
| Unified Multimodal Model | No unified model; separate providers offer narrow multimodal features | GPT-4 with Vision combines text and image input; audio and image generation run as separate models (Whisper, DALL·E 3) | Gemini 1.5 offers unified multimodal architecture under a single endpoint |
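
One way to put the comparison above to work is to encode it as data and filter by the modalities a project needs. The sketch below does that in plain Python. The capability sets are one reading of the table rows (for example, "not natively supported" and "available via a complementary service" are both treated as unsupported by the platform's own generative endpoint), so treat them as assumptions to adjust rather than as an authoritative matrix.

```python
from typing import Dict, Iterable, List, Set

# Capabilities each platform exposes through its own generative model
# endpoint, as read from the comparison table (an interpretation, not a spec).
CAPABILITIES: Dict[str, Set[str]] = {
    "Amazon Bedrock": {"image_output", "code_input"},
    "Azure OpenAI": {"image_input", "image_output", "audio_input", "code_input"},
    "Google Vertex AI": {"image_input", "image_output", "audio_input", "code_input"},
}


def platforms_supporting(required: Iterable[str]) -> List[str]:
    """Return the platforms whose capability set covers every required modality."""
    needed = set(required)
    return [name for name, caps in CAPABILITIES.items() if needed <= caps]


# Example: a voice-enabled document reader needs image and audio input.
candidates = platforms_supporting({"image_input", "audio_input"})
```

Text-to-speech is left out of every set on purpose, since the table places it in companion services on all three platforms.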

Pros and Cons: The Quick Rundown

Making sense of these platforms often comes down to identifying the strengths and trade-offs.

Here’s a quick but meaningful breakdown:

Amazon Bedrock: The Scalable Multi-Model Powerhouse

Pros:

- Access to multiple models from top providers (Anthropic, AI21, Cohere, Stability AI).

- Deep integration with the AWS ecosystem — great for existing AWS customers.

- Highly scalable and built for production-grade deployments.

Cons:

- Still maturing: offers less depth in visual tooling and MLOps features than SageMaker.

- Smaller third-party ecosystem and community support than Azure or Google.

- Limited in fine-tuning controls (currently relies more on prompt engineering and RAG).

Azure OpenAI: The Enterprise-Ready AI Workbench

Pros:

- Direct access to OpenAI’s GPT models including GPT-4, DALL·E, Whisper, and Codex.

- Seamless integration with Microsoft 365, Power Platform, and Azure DevOps.

- Enterprise-grade compliance and responsible AI tooling built-in.

Cons:

- Pricing can rise quickly if you’re not already within Microsoft’s ecosystem.

- Some limitations around custom training unless leveraging Azure ML tools.

- Limited model diversity compared to Bedrock’s multi-provider approach.

Google Vertex AI: The Full-Stack AI Innovation Engine

Pros:

- Direct access to Gemini and PaLM, including native multimodal capabilities.

- End-to-end MLOps tools: Vertex Pipelines, Feature Store, AutoML, Explainability, and Monitoring.

- Ideal for teams that prioritize data science experimentation and full model customization.

Cons:

- Pricing structure can be complex and harder to predict at scale.

- Less variety in foundation models compared to Bedrock.

- Best suited for teams already using Google Cloud; others may face a learning curve.

Amazon Bedrock, Azure OpenAI, and Google Vertex AI are not trying to solve the same problem in the same way — and that’s the point. Each platform offers something distinct:

- Amazon Bedrock simplifies access to top models with flexibility and scalability in mind.

- Azure OpenAI prioritizes enterprise AI delivery with strong model access and ecosystem ties.

- Google Vertex AI empowers technical teams to go deep into AI customization and experimentation.

Ultimately, the best platform is not simply the one with the most features — it's the one that aligns with your organization's infrastructure, AI maturity, and business goals. By matching your needs to each platform's unique strengths, you can move beyond experimentation and build AI into the core of your operations with confidence.