A media processing company reduced its monthly AWS bill by $12,000 by switching its image optimization pipeline to Graviton instances. The transition took place in under two weeks. With just a few adjustments, primarily in container builds, the team achieved a 28% reduction in costs and a 15% increase in processing speed.

This is just one of many examples showing how organizations are using AWS Graviton to control cloud costs without sacrificing performance. But results like this don’t happen by chance. They depend on choosing the right workloads and knowing where Graviton offers the biggest return.

Understanding AWS Graviton's Cost Advantage

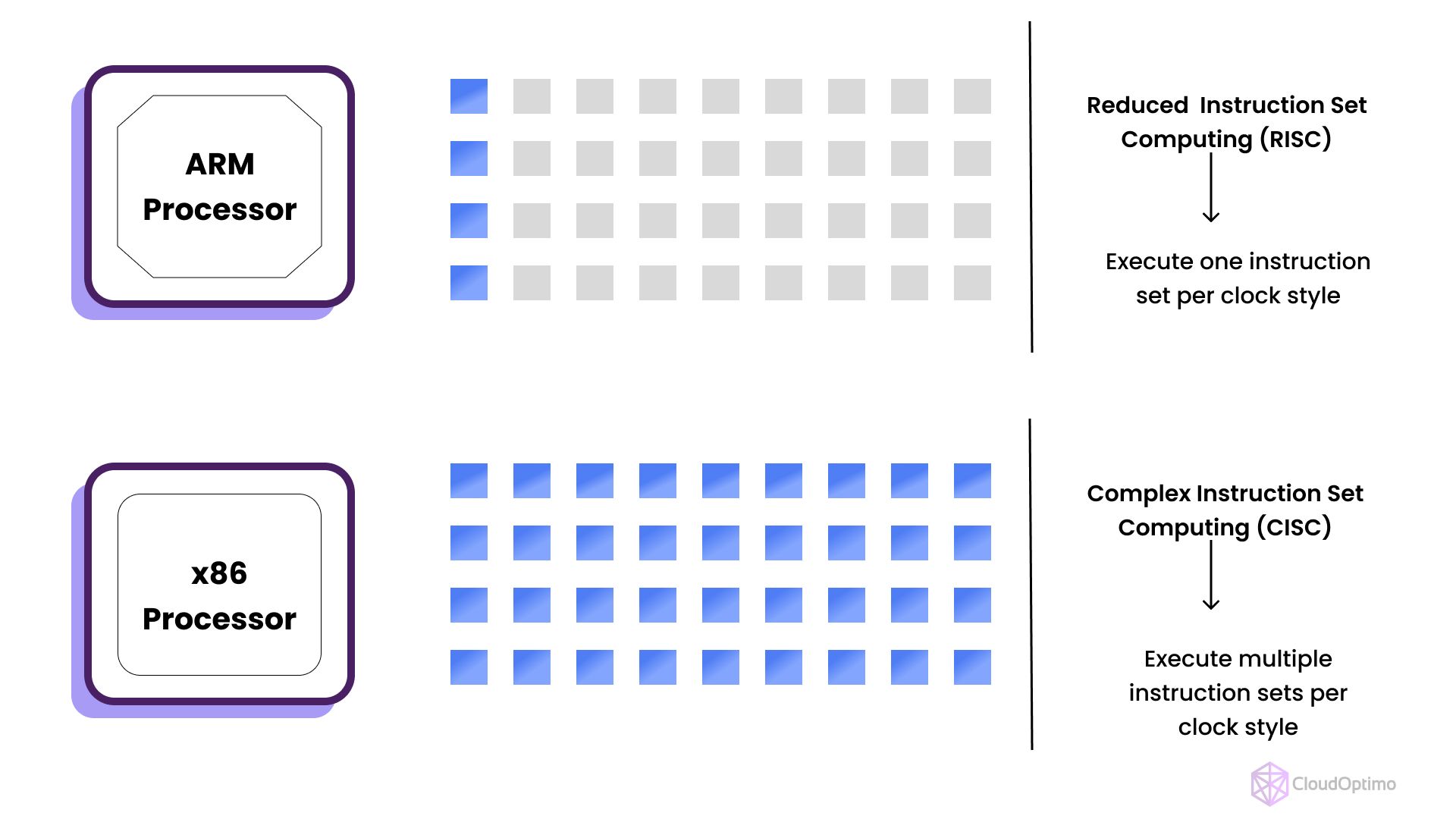

AWS Graviton processors are custom ARM-based chips that AWS designs specifically for cloud workloads running on its infrastructure. Traditional x86 processors are built to serve a broad range of general computing needs. Graviton is instead tuned for common cloud tasks such as web serving, containerized services, data processing, and databases. This specialization creates measurable financial benefits.

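- Graviton2 introduced solid general-purpose performance gains, with AWS citing up to 40% better price performance than comparable x86-based instances of its time

- Graviton3 improved compute efficiency and memory bandwidth, adding DDR5 memory and up to 25% better compute performance than Graviton2

- Graviton4 now offers the highest performance in the family, with up to 50% more cores and 75% more memory bandwidth than Graviton3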

Teams commonly report 20–40% lower compute costs than on equivalent Intel or AMD instances. That figure combines a lower hourly price with similar or better performance. The cost advantage comes from improved energy efficiency and architectural optimizations that allow AWS to offer more compute capacity at lower prices.

The processor family and its software ecosystem have matured significantly, removing many of the technical roadblocks that once slowed adoption. Graviton-based options now span EC2, ECS, EKS, Lambda, and RDS. The latest generation, Graviton4, offers up to 30% better compute performance than Graviton3.

Key Performance-Per-Dollar Advantages vs. Intel/AMD

When comparing Graviton-based instances to x86 alternatives (Intel and AMD), the performance-per-dollar advantage comes from more than just lower pricing. It's about doing more with less: less cost, less power, and fewer instances needed to hit the same performance target.

Graviton achieves this in three key ways:

- Lower hourly pricing: Graviton instances are typically 10–20% cheaper than comparable Intel/AMD instance types.

- Higher throughput per vCPU: for many workloads, especially compute- or memory-intensive ones, Graviton can deliver 20–40% higher throughput at the same or lower latency.

- Improved energy efficiency: Graviton's architecture uses less power per operation. This allows more cores per host, and AWS passes the resulting lower cost per compute unit on to users.

Real-World Impact

This means that a workload running on 10 x86-based instances might need only 6–8 Graviton instances to deliver the same or better performance. And because the instance itself costs less per hour, the total cost savings can be significant, even before factoring in reduced I/O, storage, or licensing needs.
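The arithmetic behind this claim can be checked quickly. The following is a minimal Python sketch. It assumes the rounded on-demand rates from the table below ($0.09 per hour for Intel, $0.07 per hour for Graviton) and the common approximation of 730 hours per month. These rates are illustrative, so substitute real pricing for your instance types and region.

```python
HOURS_PER_MONTH = 730  # common approximation: 8,760 hours per year / 12

def monthly_fleet_cost(instances: int, hourly_rate: float) -> float:
    """On-demand compute cost of a fleet for one month (excludes storage, I/O, licensing)."""
    return instances * hourly_rate * HOURS_PER_MONTH

# Assumed rates: the rounded on-demand prices from the comparison table
X86_RATE = 0.09
GRAVITON_RATE = 0.07

x86_cost = monthly_fleet_cost(10, X86_RATE)
graviton_costs = {n: monthly_fleet_cost(n, GRAVITON_RATE) for n in (6, 7, 8)}

for n, cost in graviton_costs.items():
    savings = 1 - cost / x86_cost
    print(f"10 x86: ${x86_cost:,.2f}/month vs {n} Graviton: ${cost:,.2f}/month ({savings:.0%} lower)")
```

Under these assumptions, savings range from roughly 38% (8 instances) to 53% (6 instances). The smaller fleet and the lower hourly rate multiply together.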

| Metric | Intel (x86) | AMD (x86) | AWS Graviton (Arm-based) |
| --- | --- | --- | --- |
| Processor | Intel Xeon Platinum 8375C | AMD EPYC 7R13 | AWS Graviton3 (64-bit Arm Neoverse) |
| On-Demand Hourly Cost (us-east-1) | $0.09 | $0.08 | $0.07 |
| Price Difference vs. Intel | - | ~9–10% lower | ~18–20% lower |
| SPEC CPU 2017 Integer Rate (est.) | ~155 | ~170 | ~180 |
| Price-Performance Index | 1.00× | ~1.2× | ~1.4–1.5× |
| Memory Bandwidth (GB/s) | ~60–70 | ~80–90 | ~115–120 |
| Performance per Watt | Baseline (1.0×) | ~1.3× better than Intel | ~1.6–1.8× better than Intel |
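The Price-Performance Index row appears to follow from a simple calculation:

1. Divide each column's estimated SPEC score by its hourly price.
2. Normalize the result to Intel.

The sketch below reproduces the row under that assumption, using the table's own estimated values rather than new measurements.

```python
# Values copied from the comparison table above (estimates, not measurements)
instances = {
    "Intel": {"spec_int_rate": 155, "hourly_cost": 0.09},
    "AMD": {"spec_int_rate": 170, "hourly_cost": 0.08},
    "Graviton3": {"spec_int_rate": 180, "hourly_cost": 0.07},
}

def price_performance_index(data: dict, baseline: str) -> dict:
    """Benchmark score per dollar-hour, normalized so the baseline equals 1.0."""
    per_dollar = {name: v["spec_int_rate"] / v["hourly_cost"] for name, v in data.items()}
    return {name: score / per_dollar[baseline] for name, score in per_dollar.items()}

index = price_performance_index(instances, baseline="Intel")
for name, value in index.items():
    print(f"{name}: {value:.2f}x")  # Intel: 1.00x, AMD: 1.23x, Graviton3: 1.49x
```

A 1.49× index means each dollar spent on the Graviton3 column buys about 49% more benchmark throughput than on the Intel column.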

Ready to migrate?

The following sections look at the workloads where organizations have made the switch and the savings they reported.

Modern Application Architecture (Web APIs and Microservices)

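Web APIs built on Node.js (for example, with Express.js) or on Python frameworks such as FastAPI tend to show results quickly when migrated to AWS Graviton. These applications are typically stateless, containerized, and built to handle high concurrency. That profile suits Graviton's design: each Graviton vCPU is a full physical core rather than a hyperthread, so concurrent requests do not compete for a shared core.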

Microservices running in containers share these same characteristics. Whether deployed via ECS, EKS, or Fargate, containerized services operate in a stateless, distributed environment, making them ideal candidates for Graviton's architecture from both performance and cost perspectives.

Node.js and Express.js applications benefit from Graviton's efficient core design and memory performance, particularly when handling many asynchronous operations. FastAPI applications, built on Python's async capabilities, often see higher throughput, which teams attribute to better concurrency and cache behavior on ARM.

How Modern Applications Transform on Graviton

In real deployments, teams have reported:

- 20–30% lower compute costs compared to Intel (c6i) or AMD (c6a) instances

- 10–15% faster API response times, even under moderate-to-high traffic

- Up to 25% fewer instances required during peak usage

These gains come from Graviton’s better memory bandwidth, cache efficiency, and energy-optimized design, which collectively reduce both runtime and overhead.

Real-World Example

A SaaS company running 25 microservices on Express.js moved from Intel-based c6i.large to Graviton-based c7g.large instances. Post-migration analysis revealed $6,800/month in compute cost savings, a 13% reduction in average API response times, and 25% fewer instances needed during peak traffic windows.

In another case, a fintech company operating over 100 microservices migrated its ECS services to c7g.medium and c7g.large instances. Services were moved gradually over two sprints, starting with non-critical APIs. The results showed a 29% reduction in compute costs and 18% lower average service response times.

Data Processing at Scale (Batch Jobs and Big Data)

Workloads such as image processing, ETL pipelines, and other compute-heavy batch jobs often see some of the largest gains on AWS Graviton. These tasks typically run on a schedule or as part of automated data workflows. They involve CPU-bound operations that scale well across cores.

Large-scale data processing workloads, especially those running on Apache Spark or Hadoop, also perform well on Graviton. This holds for managed services like Amazon EMR and for self-hosted clusters alike: teams migrating to Graviton-based instances commonly report improvements in both performance and cost efficiency.

Understanding Scale in Processing

In production environments, teams running batch jobs on Graviton-based instances have observed:

- 25–35% lower compute costs for CPU-bound workloads

- Faster job completion times, reducing pipeline latency and cost per run

- Improved predictability in job runtimes, aiding in scheduling and capacity planning
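Cost per run is the hourly rate multiplied by runtime and instance count, so a lower price and a faster job compound rather than add. A hypothetical sketch follows. The $1.00 hourly rate, 2-hour runtime, 20% price cut, and 15% speedup are round illustrative numbers, not measurements.

```python
def cost_per_run(hourly_rate: float, runtime_hours: float, instances: int) -> float:
    """Compute cost of one batch run: hourly rate x runtime x instance count."""
    return hourly_rate * runtime_hours * instances

# Hypothetical baseline: 10 instances at $1.00/hour, job takes 2.0 hours
baseline = cost_per_run(hourly_rate=1.00, runtime_hours=2.0, instances=10)
# Hypothetical Graviton run: 20% lower hourly rate and 15% shorter runtime
graviton = cost_per_run(hourly_rate=0.80, runtime_hours=2.0 * 0.85, instances=10)

print(f"baseline ${baseline:.2f}, graviton ${graviton:.2f}, "
      f"{1 - graviton / baseline:.0%} lower per run")
```

Here the two improvements combine to a 32% lower cost per run (0.80 × 0.85 = 0.68), not 35%.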

Production Pipeline Transformations

One media company running a large-scale image optimization pipeline moved from m5.large to m6g.large instances. The workload included resizing, converting, and compressing millions of images daily. The results were immediate: 28% reduction in compute costs, 15% faster processing time per image, and over $12,000 in monthly savings on a single pipeline.

A retail analytics company running daily sales reporting pipelines migrated from r5.xlarge to r7g.xlarge on EMR. Their Spark jobs performed joins, aggregations, and historical comparisons across millions of records per day. After switching to Graviton, the team achieved a 32% drop in data processing costs and 20% faster completion times, freeing up budget and compute capacity to add new analytical jobs without provisioning new resources.

CI/CD Build Runners and Automation Agents

Build systems and automation pipelines are another high-impact area where migrating to AWS Graviton delivers immediate results. CI/CD workloads like compiling code, running tests, building containers, and executing deployment tasks are often CPU-bound and time-sensitive, making them a strong fit for Graviton's core architecture.

Accelerating Development Workflows

Teams using Jenkins, GitHub Actions, or custom runners typically report faster build and test cycles on Graviton-based instances. Projects with large codebases or parallelized test suites benefit most, as Graviton handles multi-core compilation and concurrent processes with better throughput and resource efficiency.

Graviton adoption in CI pipelines not only accelerates feedback loops for developers but also lowers the cost of running high-volume builds. This dual benefit makes it especially compelling for organizations running multiple builds per hour across multiple branches or microservices.

In real deployments, teams migrating CI/CD workloads to Graviton have seen:

- 10–25% faster build and test times, reducing developer wait time and pipeline duration

- 20–30% lower cost per build, combining cheaper instance pricing with faster execution

- Improved pipeline reliability, with more consistent runtimes and fewer performance-related build failures

Engineering Team Success Stories

One engineering team managing a fleet of Jenkins agents migrated from c5.large to c7g.large instances. After the switch, they saw build times drop by 18% on average, enabling them to process more builds per hour with fewer instances. Combined with the lower hourly rate, the move resulted in a 32% cost reduction for their build infrastructure, without any degradation in developer experience.
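The "fewer instances" effect follows from simple capacity math. Under steady demand, the number of busy runners is roughly builds per hour times average build duration. The sketch below assumes a hypothetical 120 builds per hour with 12-minute builds, then applies the 18% speedup from the example above.

```python
import math

def runners_needed(builds_per_hour: float, avg_build_minutes: float) -> int:
    """Minimum runners to keep up with steady demand (ignores bursts and queueing)."""
    busy_runner_minutes = builds_per_hour * avg_build_minutes
    return math.ceil(busy_runner_minutes / 60)

before = runners_needed(builds_per_hour=120, avg_build_minutes=12.0)
after = runners_needed(builds_per_hour=120, avg_build_minutes=12.0 * 0.82)  # 18% faster
print(before, after)  # 24 20
```

Real fleets keep headroom above this minimum for bursts. Still, the ratio shows why faster builds and a lower hourly rate together shrink the bill more than either alone.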

For teams managing high-frequency builds, complex test pipelines, or large container builds, Graviton offers a quick way to speed up delivery cycles and lower CI/CD costs at scale.

Data-Intensive Applications on Graviton

Memory-heavy workloads such as MySQL, PostgreSQL, and Redis excel on AWS Graviton, particularly when performance and cost optimization are both critical. These services often form the backbone of production systems, and improvements at the database layer can deliver significant impact across entire applications.

Optimizing Database Performance and Cost

Graviton's high memory bandwidth and low memory latency directly benefit database engines. Several operations can see measurable improvements, especially under high concurrency or with large datasets:

- buffer pool reads (how databases cache data pages in memory)
- caching
- query execution
- connection management

The same efficiencies carry over to the application tier in front of these databases. Teams running microservices on Graviton-based compute frequently report:

- 20–35% lower cost per transaction due to better CPU efficiency and reduced resource overhead

- Improved pod/container density, allowing more services to run on the same node

- Better internal service communication, with lower latency and improved network throughput

Auto-scaling groups built on Graviton scale efficiently under variable load, while stateless service design enables safe, piecemeal rollout of ARM-based containers behind shared load balancers. Monitoring and observability tools like Prometheus, CloudWatch, and OpenTelemetry continue to work without modification and often highlight better response times and lower CPU usage.
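When x86 and ARM containers sit behind the same load balancer, it helps to tag each metric with the architecture that produced it, so dashboards can compare the two pools. Below is a minimal Python sketch built on the standard library's `platform.machine()`. The `cpu_arch` tag and the `orders-api` service name are hypothetical, and would map onto whatever labels your metrics library supports.

```python
import platform
from typing import Optional

# Normalize the raw strings different operating systems report for the same CPU family
_ARCH_ALIASES = {
    "x86_64": "x86_64",
    "amd64": "x86_64",   # Windows reports "AMD64"
    "aarch64": "arm64",  # Linux on Graviton reports "aarch64"
    "arm64": "arm64",    # macOS on Apple silicon reports "arm64"
}

def cpu_arch(machine: Optional[str] = None) -> str:
    """Return a normalized architecture label; defaults to the current host."""
    raw = (machine if machine is not None else platform.machine()).lower()
    return _ARCH_ALIASES.get(raw, raw)

# Hypothetical usage: attach the label to every metric and log line a service emits
metric_tags = {"service": "orders-api", "cpu_arch": cpu_arch()}
print(metric_tags)
```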

Production Database Success Stories

A SaaS platform migrated several PostgreSQL databases powering its analytics and reporting features from r5.large to r7g.large instances. The migration delivered a 22% improvement in query throughput, 30% lower CPU utilization, and a 26% reduction in overall database cost.

Serverless Functions with AWS Lambda

For teams building with serverless architectures, AWS Lambda supports Graviton through its arm64 architecture setting. Arm64 functions typically match or exceed the performance of their x86 counterparts. They are also billed at a lower price per GB-second, which reduces cost per invocation.

Serverless Cost Optimization

Teams using Graviton-based Lambda functions commonly report:

- 15–25% lower invocation costs, especially for compute-heavy or high-volume workloads

- Improved cold start performance, reducing latency on latency-sensitive paths

- Better concurrency handling, especially for batch events or fan-out processing from SQS (Simple Queue Service), SNS (Simple Notification Service), or EventBridge (event routing service)
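The Lambda bill has two parts: a per-request charge and a duration charge in GB-seconds. Arm64 lowers only the second, through both a cheaper rate and a shorter runtime. The sketch below makes two assumptions:

- **Rates:** us-east-1 first-tier list prices at the time of writing, ignoring the free tier and volume tiers. Verify them against the current Lambda pricing page.
- **Workload:** the function (10M invocations, 512 MB, 200 ms, 18% faster on arm64) is hypothetical.

```python
REQUEST_PRICE_PER_MILLION = 0.20  # assumed: same for x86_64 and arm64
PRICE_PER_GB_SECOND = {           # assumed us-east-1 first-tier rates; check current pricing
    "x86_64": 0.0000166667,
    "arm64": 0.0000133334,
}

def monthly_lambda_cost(arch: str, invocations: int, memory_mb: int, avg_ms: float) -> float:
    """Request charge plus duration charge (ignores free tier and tiered discounts)."""
    gb_seconds = invocations * (memory_mb / 1024) * (avg_ms / 1000)
    duration_cost = gb_seconds * PRICE_PER_GB_SECOND[arch]
    request_cost = invocations / 1_000_000 * REQUEST_PRICE_PER_MILLION
    return duration_cost + request_cost

# Hypothetical function: 10M invocations/month, 512 MB, 200 ms on x86, 18% faster on arm64
x86 = monthly_lambda_cost("x86_64", 10_000_000, 512, 200)
arm = monthly_lambda_cost("arm64", 10_000_000, 512, 200 * 0.82)
print(f"x86 ${x86:.2f}, arm64 ${arm:.2f}, {1 - arm / x86:.0%} lower")
```

Because the request charge does not change, very short functions see a smaller percentage saving than long-running ones.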

Event-Driven Architecture Success Stories

One financial services company running transaction processing functions migrated its Lambda workloads from x86 to ARM. These Python-based functions handled payment event ingestion and fraud detection. The migration analysis showed a 22% drop in invocation costs and an 18% improvement in average execution time, resulting in over $8,000 in monthly savings with no change in logic or architecture.

ML Inference with CPU-Based Models

Not all machine learning inference workloads require GPU acceleration. Many production use cases like text classification, recommendations, and basic predictive APIs can run efficiently on CPUs, and Graviton provides a strong cost-performance advantage for these scenarios.

Scaling AI Workloads Cost-Effectively

Models built with frameworks like scikit-learn, XGBoost, or even lightweight neural networks often benefit from Graviton’s higher memory bandwidth and improved CPU efficiency. Model loading, feature processing, and inference calls perform well, especially when handling moderate traffic or running behind scalable APIs.

Teams using Graviton for CPU-based inference workloads typically see:

- 25–35% lower inference costs compared to x86-based instances

- Faster model initialization and feature processing, especially for text and tabular data

- More cost-effective scaling, enabling broader experimentation without overspending

AI Production Workload Results

In one example, a media company migrated its content classification service from c6i.large to c7g.large. The service used pre-trained scikit-learn and XGBoost models to tag incoming content for downstream workflows. After testing on ARM-compatible containers, the team completed the migration in under a week.

Post-migration results showed a 31% reduction in compute costs, along with faster model response times, which allowed the service to handle traffic spikes with fewer instances.

Content Delivery and Edge Services

Edge computing often relies on fast, efficient services like reverse proxies, content caching, and media optimization. Graviton-based instances offer superior performance for these workloads while significantly lowering infrastructure costs.

Optimizing Global Content Distribution

Workloads such as CDN (Content Delivery Network) origin servers, load balancers, and edge cache nodes capitalize on Graviton's improved network performance and better memory throughput. Teams often notice more efficient handling of concurrent connections, reduced CPU overhead, and smoother traffic bursts during peak usage periods.

The pattern mirrors what teams see in large-scale data processing, where teams running Spark and Hadoop on Graviton instances report:

- 25–40% lower processing costs, driven by both reduced runtime and lower instance pricing

- Faster job completion, especially for memory-bound or shuffle-intensive operations

- More efficient resource scaling, allowing larger datasets to be processed within the same cost envelope

Global Platform Transformation

A digital media company operating a global content platform migrated its edge proxy layer to Graviton-based containers. The transformation resulted in a 27% reduction in monthly infrastructure spend and consistently faster page load times for end-users worldwide.

Managing Migration Risks

Even with strong benefits, successful migrations depend on planning and careful execution. Teams that migrate to Graviton most effectively tend to follow a few common risk-management practices:

Rollback Planning

Most teams set up parallel environments, running both x86 and ARM architectures simultaneously during transition periods. This ensures fast rollback if unexpected issues arise.

Compatibility Validation

While compatibility is a top concern, over 95% of modern applications (especially those built with common frameworks) run on Graviton with little or no modification. Still, it's best to test dependencies in staging before production rollout.
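Compiled dependencies are the most common source of surprises, because a native library built for x86-64 will not load on Graviton. One quick first-pass check is to read the ELF header of each shared library in a dependency directory and flag any not built for aarch64. The Python sketch below does that. It only inspects `.so` files and does not replace running your test suite on an ARM staging environment. The directory in the usage line is a placeholder.

```python
import os
import struct
from typing import Dict, Optional

# A few e_machine values defined by the ELF specification
EM_NAMES = {3: "x86", 40: "arm", 62: "x86_64", 183: "aarch64"}

def elf_machine(header: bytes) -> Optional[str]:
    """Return the target architecture recorded in an ELF header, or None if not ELF."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        return None
    # Byte 5 (EI_DATA) gives the byte order: 1 = little-endian, 2 = big-endian
    fmt = "<H" if header[5] == 1 else ">H"
    (machine,) = struct.unpack_from(fmt, header, 18)  # e_machine sits at offset 18
    return EM_NAMES.get(machine, f"unknown({machine})")

def find_non_arm64_binaries(root: str) -> Dict[str, str]:
    """Walk a directory (e.g. site-packages) and report shared libraries not built for aarch64."""
    findings = {}
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            if not name.endswith(".so") and ".so." not in name:
                continue
            path = os.path.join(dirpath, name)
            try:
                with open(path, "rb") as fh:
                    arch = elf_machine(fh.read(20))
            except OSError:
                continue  # unreadable file or broken symlink
            if arch is not None and arch != "aarch64":
                findings[path] = arch
    return findings

# Usage (placeholder path):
#   for path, arch in find_non_arm64_binaries("/path/to/site-packages").items():
#       print(arch, path)
```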

Performance Monitoring

Implementing robust monitoring before and during migration helps teams measure real impact and spot regressions early. This enables data-driven go/no-go decisions.

Gradual, Low-Risk Rollout

The most successful teams start with non-critical or internal services, build confidence through early wins, and then expand to customer-facing or high-impact workloads.
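The rollback, monitoring, and gradual-rollout practices above can be combined into a simple promotion rule. Shift traffic to the ARM pool in steps, and send everything back to x86 if ARM's error rate or p95 latency regresses beyond a tolerance. The sketch below is minimal: the step sizes and thresholds are hypothetical and would need tuning per service. It does not cover applying the weight itself (for example, through weighted target groups on an Application Load Balancer).

```python
from dataclasses import dataclass
from typing import List

# Hypothetical traffic steps: percent of requests sent to the ARM pool
STEPS = [5, 25, 50, 100]

@dataclass
class StageMetrics:
    arm_weight: int        # current percent of traffic on ARM
    arm_error_rate: float  # fraction of failed requests on ARM during the stage
    x86_error_rate: float  # same time window, x86 pool
    arm_p95_ms: float
    x86_p95_ms: float

def next_arm_weight(m: StageMetrics, steps: List[int] = STEPS,
                    max_error_delta: float = 0.001,
                    max_latency_regression: float = 0.10) -> int:
    """Return the next ARM traffic weight, or 0 to roll all traffic back to x86."""
    if m.arm_error_rate > m.x86_error_rate + max_error_delta:
        return 0  # ARM fails noticeably more often: roll back
    if m.arm_p95_ms > m.x86_p95_ms * (1 + max_latency_regression):
        return 0  # ARM p95 latency regressed beyond tolerance: roll back
    later = [w for w in steps if w > m.arm_weight]
    return later[0] if later else m.arm_weight  # promote, or hold at 100%
```

Keeping the x86 pool running until the final step is what makes the "return 0" branch a fast rollback rather than a redeployment.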

Making the Transition to AWS Graviton: From Idea to Implementation

Shifting to AWS Graviton isn’t just a way to reduce cloud bills; it’s a long-term strategy for building faster, more efficient infrastructure. Like any technology shift, the key to success is starting in the right place, testing intentionally, and measuring what matters.

Where Should You Begin?

Start by identifying workloads that are a natural fit for Graviton. You don't need to migrate everything at once, nor should you. In most environments, there are a few early candidates where even a small shift can lead to meaningful savings.

Here’s what to look for:

- Applications using common languages like Python, Node.js, Java, or Go

- Stateless, containerized services already running on ECS, EKS, or Lambda

- Compute-heavy workloads: request processing, analytics, batch jobs

- Systems with predictable usage patterns, which simplify benchmarking

In simpler terms: If a service runs on a modern stack, isn't tightly coupled to x86-specific features, and feels expensive, it's worth testing on Graviton.
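The checklist above can be turned into a rough triage score for an inventory of services. This is a hypothetical sketch: the field names and the equal weighting of criteria are assumptions. A high score only means a service is worth an A/B test, not that it will migrate cleanly.

```python
from dataclasses import dataclass

# Languages the checklist above names as common, ARM-friendly stacks
ARM_FRIENDLY_LANGUAGES = {"python", "node.js", "java", "go"}

@dataclass
class Service:
    name: str
    language: str
    containerized: bool          # already on ECS, EKS, or Lambda
    compute_heavy: bool          # request processing, analytics, batch jobs
    predictable_load: bool       # simplifies benchmarking
    x86_only_dependencies: bool  # e.g. a vendor binary with no arm64 build

def graviton_fit_score(svc: Service) -> int:
    """Count how many screening criteria a service meets (0-4); x86-only deps score 0."""
    if svc.x86_only_dependencies:
        return 0
    return sum([
        svc.language.lower() in ARM_FRIENDLY_LANGUAGES,
        svc.containerized,
        svc.compute_heavy,
        svc.predictable_load,
    ])

# Hypothetical inventory
services = [
    Service("billing-api", "Java", True, False, True, False),
    Service("legacy-reports", "C++", False, True, False, True),
    Service("image-resizer", "Python", True, True, True, False),
]
ranked = sorted(services, key=graviton_fit_score, reverse=True)
print([(s.name, graviton_fit_score(s)) for s in ranked])
# [('image-resizer', 4), ('billing-api', 3), ('legacy-reports', 0)]
```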

Good News: AWS Already Did Most of the Hard Work

One of the key advantages of Graviton today is that you don't need to rebuild your stack from scratch. AWS has spent the last few years extending Graviton support across most of its core services.

For example:

- EC2 now offers a wide range of Graviton instance types, from general-purpose to compute-optimized

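- Lambda lets you switch to the arm64 architecture with a single function setting, provided your dependencies (especially native extensions) are available for arm64

- ECS and EKS support ARM-based containers; once your images are built for arm64 (or published as multi-architecture images), the rest of the deployment pipeline usually needs little or no change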

- RDS now provides Graviton-powered instances for MySQL, PostgreSQL, MariaDB, and more

And most modern observability tools (like Datadog, New Relic, Prometheus) work with ARM-based instances out of the box.
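The ECS and Lambda settings involved are small. Here is a hedged Python sketch of the request parameters, shaped for boto3's ECS `register_task_definition` and Lambda `update_function_code` calls. Note the following:

- The family, image, and function names are hypothetical.
- The calls are shown only in comments.
- A production task definition usually needs more fields, for example an execution role for pulling private images.

```python
def fargate_arm64_task_definition(family: str, image: str) -> dict:
    """Keyword arguments for ECS register_task_definition targeting Fargate on ARM64."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks use awsvpc networking
        "cpu": "256",
        "memory": "512",
        # This block selects Graviton; the image must include an arm64 build
        "runtimePlatform": {"cpuArchitecture": "ARM64", "operatingSystemFamily": "LINUX"},
        "containerDefinitions": [{"name": family, "image": image, "essential": True}],
    }

def lambda_arm64_update(function_name: str, image_uri: str) -> dict:
    """Keyword arguments for Lambda update_function_code switching a function to arm64."""
    return {"FunctionName": function_name, "ImageUri": image_uri, "Architectures": ["arm64"]}

# With AWS credentials configured, these would be passed to boto3, e.g.:
#   boto3.client("ecs").register_task_definition(**fargate_arm64_task_definition("orders", "..."))
#   boto3.client("lambda").update_function_code(**lambda_arm64_update("orders-fn", "..."))
```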

How to Know It’s Working: Test, Don’t Guess

Instead of waiting for a complex migration plan, teams are finding success by running simple A/B tests between their current infrastructure and a Graviton alternative.

The approach is straightforward:

Pick a service. Run it on both x86 (for example, Intel-based c6i or AMD-based c6a) and Graviton (c7g). Compare.

You're not looking for perfection; you're looking for clear signals:

- Did the performance improve or stay the same?

- Did your cost per request/job/user drop?

- Were there any compatibility surprises?
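The first two signals can be computed directly from a side-by-side run. Compatibility surprises are better caught in error logs and test results. The following is a minimal sketch that compares p95 latency and cost per 1,000 requests. The latency samples, hourly rates, and request volumes are hypothetical placeholders for your own measurements.

```python
import statistics
from typing import Dict, List

def p95(latencies_ms: List[float]) -> float:
    """95th percentile latency (needs at least two samples)."""
    return statistics.quantiles(latencies_ms, n=100, method="inclusive")[94]

def cost_per_1k_requests(hourly_rate: float, requests_per_hour: float) -> float:
    """Instance cost attributable to each 1,000 requests served."""
    return hourly_rate / requests_per_hour * 1000

def ab_signals(x86_ms: List[float], arm_ms: List[float],
               x86_rate: float, arm_rate: float,
               x86_rph: float, arm_rph: float) -> Dict[str, float]:
    """Relative change of the Graviton run versus x86 (negative means lower)."""
    return {
        "p95_latency_change": p95(arm_ms) / p95(x86_ms) - 1,
        "cost_per_1k_change": cost_per_1k_requests(arm_rate, arm_rph)
                              / cost_per_1k_requests(x86_rate, x86_rph) - 1,
    }

# Hypothetical samples: ARM responses 10% faster, same request volume, $0.07 vs $0.09/hour
x86_samples = [float(v) for v in range(100, 200)]
arm_samples = [v * 0.9 for v in x86_samples]
signals = ab_signals(x86_samples, arm_samples, 0.09, 0.07, 36_000, 36_000)
print({k: f"{v:+.0%}" for k, v in signals.items()})
```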

Many teams find that the benefits become evident after only a few hours of side-by-side testing. Graviton lets teams accomplish more with fewer resources, which means fewer instances to manage and greater long-term savings.