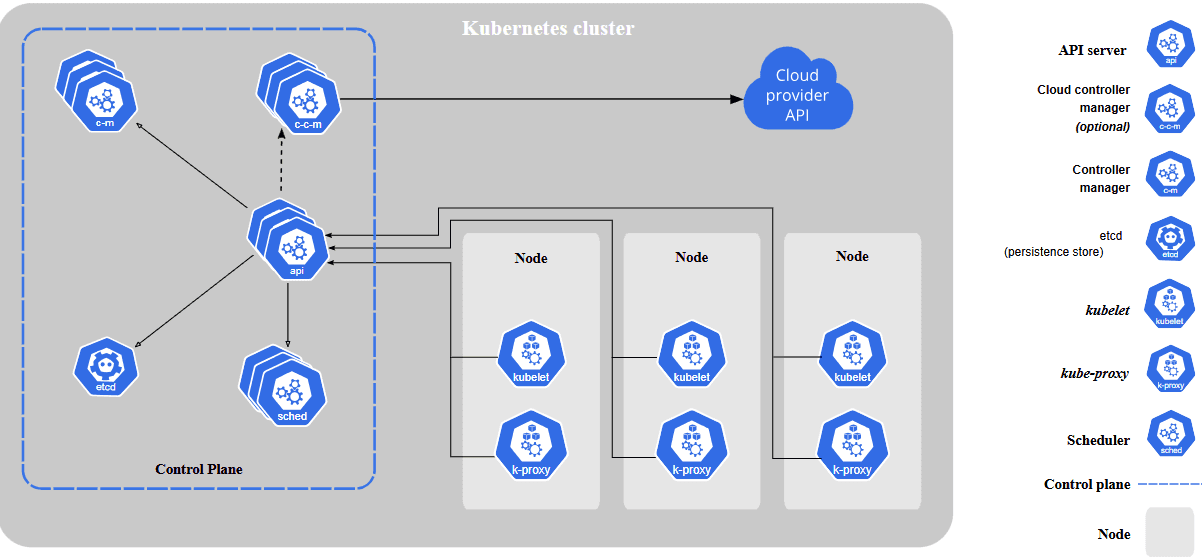

For nearly a decade, Kubernetes has been the cornerstone of container orchestration. It brought order to container chaos, offered powerful APIs, and became the backbone of cloud-native deployments across industries. But as the cloud landscape continues to evolve, so too must our tools and thinking.

Kubernetes solved many problems, but it also introduced new complexity. And today, teams are increasingly asking: Is Kubernetes still the best tool for every job?

This blog explores what’s emerging beyond Kubernetes, from serverless to platform engineering, and helps you understand when and why you might consider alternatives.

Limitations of Kubernetes in Modern Cloud Environments


Kubernetes is widely used for container orchestration, but it has some important limitations that affect how well it fits modern cloud environments. Recognizing these limitations helps teams decide if Kubernetes is the right choice for their needs.

- When Simple Deployments Become Complex Operations

Your development team wants to deploy a simple web application. With Kubernetes, they first need to navigate clusters, control planes, networking plugins, ingress controllers, and storage systems. Each component requires setup and coordination. A deployment that should take 10 minutes becomes a multi-hour debugging session involving YAML configurations, namespace management, and cluster readiness checks.

This operational complexity increases resource use, both in computing power and management time. The complexity often leads to configuration mistakes that affect stability and increase costs. Teams find themselves spending more time managing infrastructure than building features.
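To make that overhead concrete, here is a minimal Python sketch that builds the three objects a basic web application typically needs on Kubernetes: a Deployment, a Service, and an Ingress. The app name `hello-web`, the image, and the hostname are hypothetical. A real cluster would also need an ingress controller, TLS, and RBAC, none of which this sketch covers.

```python
import json


def web_app_manifests(name: str, image: str, host: str, port: int = 8080) -> list[dict]:
    """Return the Kubernetes objects a minimal web app needs (illustrative only)."""
    labels = {"app": name}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": 2,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": port}]}
                    ]
                },
            },
        },
    }
    # The Service gives the pods a stable in-cluster address.
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"selector": labels, "ports": [{"port": 80, "targetPort": port}]},
    }
    # The Ingress routes external HTTP traffic for `host` to the Service.
    ingress = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {"service": {"name": name, "port": {"number": 80}}},
                            }
                        ]
                    },
                }
            ]
        },
    }
    return [deployment, service, ingress]


# Hypothetical values, used only for illustration.
manifests = web_app_manifests("hello-web", "example.com/hello-web:1.0", "hello.example.com")
print(json.dumps(manifests, indent=2))
```

Even this stripped-down version produces three separate objects that must agree on labels, ports, and names, which is where many of the configuration mistakes mentioned above tend to creep in.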

- The Skills Gap Challenge

Using Kubernetes efficiently takes specialized skills, and many teams find them difficult to acquire. Even routine tasks like upgrading clusters or troubleshooting issues demand deep platform understanding. A mid-sized software company discovered that 40% of their engineering time was spent on Kubernetes maintenance rather than product development. They eventually dedicated three full-time engineers just to manage their Kubernetes environment.

This maintenance burden takes time and effort away from building features and slows down overall progress. The learning curve means teams either invest heavily in training or hire specialized talent, both of which can be expensive and time-consuming.

- Developer Experience Bottlenecks

Kubernetes focuses on controlling infrastructure, but this creates friction for developers. Instead of concentrating on writing code, developers must manage complex configuration files, set permissions, and handle platform details. A frontend developer at a financial services company spent three days trying to deploy a simple API endpoint. Between writing deployment configs, setting up ingress rules, and debugging networking issues, no time remained for actual development.

This adds extra steps and increases the chance of mistakes, which slows down deployment and reduces productivity. The gap between developer needs and Kubernetes complexity has become a major pain point for many organizations.

Modern Applications Demand Different Solutions

Cloud-native applications today are very different from what they were just a few years ago. They are designed to be faster, simpler, and more flexible. Because of this, the way we manage and deploy these applications is also changing. The orchestration tools that worked well before don’t always meet the needs of modern workloads.

- Simpler and Faster Delivery Pipelines Are Essential

Modern teams expect code to move from version control to production quickly, often in minutes. They prioritize fast, automated delivery pipelines that avoid unnecessary infrastructure layers. With Kubernetes, each deployment can involve writing YAML files, handling RBAC permissions, managing namespaces, and ensuring cluster readiness. These steps create friction that slows down delivery.

As a result, teams are adopting platforms that offer:

- Pre-configured build and deploy workflows without manual setup

- Automatic scaling and routing without custom resource definitions

- Direct integration with CI/CD pipelines, reducing the need for custom scripts

A media streaming company migrated from Kubernetes to Google Cloud Run and reduced its deployment time from 45 minutes to 3 minutes. Their development team could now push features multiple times per day instead of once per week.

The goal is not just deployment speed, but reducing the number of platform decisions developers must make to reach production.

- Event-Driven Workloads Need Different Orchestration

Many workloads today are built around short-lived, stateless processes triggered by events such as background jobs, webhooks, or processing queues. These applications do not need persistent infrastructure or complex container scheduling. Kubernetes can support them, but doing so often means managing idle capacity, handling cold starts, and maintaining controllers that monitor queue states.

In contrast, platforms built for event-driven workloads:

- Automatically scale down to zero with no configuration

- Handle execution per request or event without needing pods or deployments

- Reduce idle compute cost for non-continuous workloads

An e-commerce platform found that 70% of their workloads were event-driven functions that ran for less than 30 seconds. Maintaining Kubernetes clusters for these workloads meant paying for idle capacity 95% of the time. After moving to AWS Lambda, they reduced their infrastructure costs by 60% while improving response times.

For many of these use cases, the complexity of Kubernetes provides little value and introduces operational overhead.
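The idle-capacity argument comes down to simple arithmetic, sketched below in Python. The prices are hypothetical and chosen only to keep the numbers easy to follow; they are not real provider rates and do not reproduce the figures from the example above.

```python
SECONDS_PER_HOUR = 3600


def always_on_cost(hourly_rate: float, hours: float) -> float:
    """Cost of capacity that is billed whether or not it is doing work."""
    return hourly_rate * hours


def pay_per_use_cost(per_second_rate: float, hours: float, utilization: float) -> float:
    """Cost when only the busy fraction of the period is billed."""
    busy_seconds = hours * SECONDS_PER_HOUR * utilization
    return per_second_rate * busy_seconds


def break_even_utilization(hourly_rate: float, per_second_rate: float) -> float:
    """Utilization above which always-on capacity becomes the cheaper option."""
    return hourly_rate / (per_second_rate * SECONDS_PER_HOUR)


# Hypothetical prices (assumptions, not real provider rates).
HOURLY_RATE = 0.10        # always-on capacity, per hour
PER_SECOND_RATE = 0.0001  # pay-per-use capacity, per busy second
HOURS_PER_MONTH = 730

reserved = always_on_cost(HOURLY_RATE, HOURS_PER_MONTH)
on_demand = pay_per_use_cost(PER_SECOND_RATE, HOURS_PER_MONTH, utilization=0.05)
threshold = break_even_utilization(HOURLY_RATE, PER_SECOND_RATE)

print(f"always-on: {reserved:.2f}, pay-per-use at 5% busy: {on_demand:.2f}")
print(f"break-even utilization: {threshold:.0%}")
```

With these made-up prices, pay-per-use stays cheaper until the workload is busy roughly 28% of the time. The useful exercise is plugging in your own rates and measured utilization, since the break-even point moves considerably between providers and workload shapes.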

- Cost Efficiency, Scale, and Visibility Are Top Priorities

Teams are now expected to optimize for both performance and cost. Kubernetes supports autoscaling and observability, but these usually rely on third-party tools such as Prometheus, Grafana, OpenTelemetry, or custom autoscalers. Integrating and managing these tools adds a maintenance burden and increases failure points.

Modern orchestration platforms are beginning to offer:

- Built-in usage metrics and logging, without requiring separate tools

- Transparent billing tied to specific workloads, improving cost visibility

- Fine-grained autoscaling based on concurrency, request volume, or custom metrics

A financial technology company discovered they were spending 30% of their engineering time managing, monitoring, and logging tools around Kubernetes. After switching to managed platforms with built-in observability, they redirected that time to feature development and improved their time-to-market by 40%.

By offering these features natively, newer platforms allow organizations to shift from maintaining infrastructure to optimizing outcomes.
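Concurrency-based autoscaling, mentioned in the list above, can be sketched as a single formula: divide the number of in-flight requests by the concurrency each instance should handle, and round up. The Python below is a simplified illustration, not any vendor's actual algorithm. Real platforms add smoothing windows, minimum instance counts, and cooldowns, and the parameter names here are hypothetical.

```python
import math


def desired_instances(
    in_flight_requests: int,
    target_concurrency: int,
    max_instances: int = 100,
) -> int:
    """Return how many instances are needed for the current load.

    target_concurrency is the number of simultaneous requests one
    instance is expected to handle (an assumed tuning parameter).
    """
    if target_concurrency <= 0:
        raise ValueError("target_concurrency must be positive")
    if in_flight_requests <= 0:
        return 0  # scale to zero when there is no traffic
    needed = math.ceil(in_flight_requests / target_concurrency)
    return min(needed, max_instances)


for load in (0, 1, 80, 81, 100_000):
    print(load, "->", desired_instances(load, target_concurrency=80))
```

On Kubernetes, similar behavior usually means installing and tuning an additional autoscaler. On platforms that offer it natively, the target concurrency is typically the only setting a team has to choose.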

Emerging Models of Container Orchestration

As modern applications move toward faster delivery, lighter workloads, and more automation, the traditional Kubernetes-based orchestration model is being re-evaluated. Several new approaches have emerged that shift the focus from infrastructure management to application delivery and developer productivity.

These models represent a fundamental shift in priorities: less operational burden, faster deployment cycles, better cost control, and native support for event-driven and stateless workloads.

- Serverless and Function-Based Execution

Serverless platforms like AWS Lambda, Google Cloud Functions, and Azure Functions allow teams to run code in response to specific events such as an HTTP request, a file upload, or a message in a queue, without managing any infrastructure.

This model is especially useful for:

- Short-lived, stateless tasks like image processing, API endpoints, or background jobs

- Highly variable workloads that benefit from automatic scaling to zero when idle

- Reducing operational effort, since the platform handles provisioning, scaling, and availability

A digital publishing company moved its image processing pipeline from Kubernetes to AWS Lambda. They eliminated the need for managing worker nodes and reduced their processing costs by 50% while improving processing speed by 30%.

For teams who only need to run small, focused units of logic and don’t want to manage servers or containers, function-based execution offers a clear alternative to Kubernetes.
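For a sense of how small such a unit can be, here is a minimal sketch of an HTTP-triggered function in Python. It follows the `handler(event, context)` shape that AWS Lambda uses for Python functions and assumes an API Gateway-style proxy event. The `name` query parameter and the response message are hypothetical.

```python
import json


def handler(event, context):
    """Respond to an HTTP-style event with a small JSON greeting."""
    # Assumes an API Gateway-style proxy event; the query parameters
    # field may be missing or None when no parameters are sent.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }


# Local smoke test with a hand-built event; no cloud account needed.
response = handler({"queryStringParameters": {"name": "team"}}, None)
print(response["statusCode"], response["body"])
```

There is no Deployment, Service, or Ingress to write here. Provisioning, scaling, and routing are left to the platform, which is the trade-off this model offers.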

- Managed Container Runtimes and Zero-Ops Platforms

For applications that still require containers but not the complexity of managing clusters, managed container runtimes are gaining significant adoption. Services such as AWS App Runner, Azure Container Apps, and Google Cloud Run allow developers to deploy standard container images without dealing with infrastructure setup.

These platforms provide:

- Automatic scaling based on HTTP traffic or background events

- Integrated networking and observability without manual configuration

- Built-in security and isolation with minimal customization

- Simplified deployment, often using a single command or a Git-based workflow

A technology startup migrated its microservices from Kubernetes to Google Cloud Run in just two weeks. Their deployment process went from a complex 20-step procedure to a simple git push, and their infrastructure costs dropped by 40%.

The result is a container experience that supports custom runtimes and frameworks while significantly reducing the need for Kubernetes expertise. This makes them ideal for teams that want more flexibility than serverless functions but less complexity than Kubernetes.

- Decoupled Control Planes and Policy-Driven Automation

Traditional Kubernetes environments tightly couple the control plane (which schedules and manages workloads) with the compute environment where workloads run. Newer platforms are separating these responsibilities to create modular, policy-driven orchestration systems.

Examples include tools like Crossplane, KubeVela, and platform engineering approaches built on GitOps. These enable teams to:

- Apply deployment policies across multiple environments without directly managing clusters

- Use infrastructure-as-code patterns to define workload behavior, dependencies, and policies

- Centralize governance and automation while allowing execution to happen wherever it makes sense: on-prem, across cloud regions, or in hybrid environments

This separation is particularly useful in large organizations that need consistent governance, multi-cloud flexibility, and compliance enforcement without locking into a single orchestrator.
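The core idea behind a declarative control plane is a comparison between desired state and observed state that yields a plan of actions. The Python sketch below illustrates that idea only. It is not the Crossplane or KubeVela API, and the resource names and fields are hypothetical.

```python
def plan(desired: dict[str, dict], observed: dict[str, dict]) -> dict[str, list[str]]:
    """Compare desired and observed resources and return the actions needed."""
    create = sorted(name for name in desired if name not in observed)
    update = sorted(
        name for name in desired
        if name in observed and desired[name] != observed[name]
    )
    delete = sorted(name for name in observed if name not in desired)
    return {"create": create, "update": update, "delete": delete}


# Desired state would normally live in version control (hypothetical resources).
desired = {
    "orders-db": {"size": "small"},
    "assets-bucket": {"region": "eu"},
    "jobs-queue": {},
}
# Observed state is what actually exists in the target environment.
observed = {
    "orders-db": {"size": "large"},
    "old-cache": {},
    "jobs-queue": {},
}

print(plan(desired, observed))
```

Because the plan is computed from data rather than from imperative scripts, the same desired state can be evaluated against several environments, which is what makes centralized governance practical.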

Emerging orchestration models are not trying to replace Kubernetes in every scenario. Instead, they offer targeted solutions that reduce complexity, improve efficiency, and better match the architecture of modern applications.

| Use Case | Best Fit |
| --- | --- |
| Stateless APIs, background jobs, event triggers | Serverless (e.g., AWS Lambda, Google Cloud Functions) |
| Web apps and microservices in containers | Managed runtimes (e.g., Cloud Run, App Runner) |
| Multi-environment policy control and abstraction | Decoupled control planes (e.g., Crossplane) |

Leading Alternatives and Next-Generation Platforms

Beyond new models of orchestration, there is now a growing ecosystem of tools that offer specific, production-ready alternatives to Kubernetes. These platforms are not theoretical concepts or gradual improvements; they are mature, supported services and frameworks that bring clarity and focus to cloud-native operations. Each one takes a different approach to reducing complexity, improving speed, and aligning with modern application demands.

Serverless Container Platforms

Google Cloud Run, AWS App Runner, and Azure Container Apps represent a new category of container services where operational control is intentionally minimized. These platforms provide a clear path for teams that want to run containerized applications without managing clusters or writing orchestration logic.

Key strengths include:

- Automatic HTTPS, IAM, and autoscaling without manual configuration

- Support for any language or framework through Docker images

- Event-driven capabilities using Pub/Sub, queues, or HTTP triggers

A video processing company migrated from Kubernetes to Google Cloud Run. It kept its existing Docker images and frameworks, gained HTTPS, IAM, and autoscaling without manual configuration, and used Pub/Sub triggers for event-driven processing. The company reduced its operational overhead by 70% and improved deployment speed by 80%.

A startup company moved its web services from Kubernetes to AWS App Runner. They eliminated the need for cluster management while maintaining full control over their application containers. Their deployment process was simplified from managing multiple YAML files to a single command, and their infrastructure costs decreased by 45%.

These platforms are particularly effective for web services, APIs, and backend tasks where predictable scaling and fast deployment are more valuable than fine-grained infrastructure control.

HashiCorp Nomad

Nomad offers a unified scheduler that can run containers, virtual machines, and standalone binaries. It stands out for its simplicity and flexibility across infrastructure types, including data centers, edge environments, and hybrid cloud.

Why it matters:

- Single binary deployment with a small resource footprint

- Support for non-container workloads, including legacy applications

- Smooth integration with Consul (networking) and Vault (secrets) for a complete operational stack

A multinational corporation chose Nomad because a single scheduler could run its containers, virtual machines, and standalone binaries. The team deployed it as a single binary with minimal resource usage, ran legacy applications alongside modern containers, and integrated it with Consul for networking and Vault for secrets management.

The transition took 3 months compared to their estimated 8-month Kubernetes migration timeline. Their infrastructure team reported that Nomad's simplicity allowed them to focus on applications rather than platform maintenance.

Nomad is well-suited for teams that value operational consistency but don’t want the architectural weight or learning curve of Kubernetes.

Crossplane and KubeVela

While built on Kubernetes, Crossplane and KubeVela shift the focus away from raw orchestration and toward platform engineering. They allow teams to define infrastructure, environments, and deployment workflows as reusable, version-controlled APIs.

Key features include:

- Composable infrastructure definitions using Kubernetes CRDs

- Policy-driven automation for deploying cloud services across AWS, GCP, Azure, and more

- Custom developer platforms that hide underlying complexity from application teams

A technology company implemented Crossplane to create reusable infrastructure definitions across its AWS, GCP, and Azure environments. It defined composable infrastructure with Kubernetes CRDs, used policy-driven automation to deploy cloud services across providers, and built a custom developer platform that hides the underlying complexity from application teams.

Their platform team could now manage infrastructure for 50+ development teams through standardized APIs, reducing infrastructure setup time from weeks to minutes.

These tools are ideal for organizations adopting internal developer platforms (IDPs) or GitOps workflows, where platform teams own the infrastructure and developers consume it through simple abstractions.

WebAssembly (Wasm) as a Cloud Runtime

WebAssembly (Wasm) introduces a lightweight, fast, and secure runtime environment that challenges the assumption that containers are the only unit of deployment. Projects like Fermyon Spin and Wasmtime are enabling a new form of workload execution that is highly portable and ideal for fine-grained, event-driven tasks.

What sets Wasm apart:

- Near-instant startup times, often under 1 millisecond

- Small resource footprint, suitable for edge devices and IoT

- Language-agnostic support for Rust, C, TinyGo, and more

An IoT company deployed WebAssembly for its edge computing applications and reported startup times under 1 millisecond, resource usage low enough for its IoT devices, and support for the different languages its development teams use.

They processed 10x more events per device compared to their previous container-based approach, while reducing power consumption by 60%.

WebAssembly-based orchestration shows strong potential in areas where traditional containers are too heavy or slow, especially at the edge or in high-density microservice environments.

| Platform | Primary Use Case | Core Strength |
| --- | --- | --- |
| Google Cloud Run / App Runner | Stateless APIs, containerized apps | No infrastructure to manage, fast auto-scaling |
| HashiCorp Nomad | Mixed workloads (containers, VMs, binaries) | Lightweight, flexible, suitable for hybrid setups |
| Crossplane / KubeVela | Platform engineering, internal platforms | Infrastructure abstraction, policy-driven delivery |
| WebAssembly (Wasm + Fermyon) | Event-driven, edge-native workloads | Ultra-fast startup, minimal resource usage |

Platform Engineering as the New Foundation

Instead of asking "how do we orchestrate containers?", many teams are now asking "how do we make developers more productive?" This shift has led to the emergence of platform engineering as a discipline focused on creating better developer experiences.

Internal Developer Platforms (IDPs)

Internal Developer Platforms provide a structured, self-service interface for application teams to deploy, monitor, and scale services without direct involvement in infrastructure. These platforms abstract away tools such as Kubernetes, Terraform, or CI/CD systems behind clearly defined workflows and guardrails.

An effective IDP typically includes:

- Predefined environments with built-in infrastructure, networking, and observability

- Role-based access and self-service deployment controls

- Clear separation of responsibilities between platform teams and application developers

A large software company built an internal platform that reduced their average deployment time from 2 hours to 5 minutes. Developers can now deploy applications through a simple web interface without needing to understand the underlying Kubernetes complexity. This change freed up 200 hours per week across their engineering teams.

This model allows platform teams to maintain governance while enabling developers to work independently and efficiently.

From Orchestration to Developer Experience

Platform engineering is not just about automating deployment steps; it is about hiding operational complexity behind intentional interfaces. Developers interact with simple service definitions, not Kubernetes manifests or infrastructure code.

Whether the underlying system uses Kubernetes, Cloud Run, Nomad, or a serverless function platform becomes irrelevant to the application team. What matters is:

- Fast, reliable deployments

- Built-in observability and diagnostics

- Consistent environments across stages

By abstracting the orchestration layer, platform engineering reduces cognitive load and accelerates time to production. Teams report that developers can focus on business logic rather than infrastructure concerns.
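One way to picture such an interface is a small service definition that the platform translates into backend-specific commands. The Python sketch below is illustrative only. The `ServiceDefinition` type and `render_command` function are hypothetical, and the generated `kubectl` and `gcloud` invocations are simplified, since a real platform would also handle regions, credentials, and rollout policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceDefinition:
    """What an application team declares; nothing here names a backend."""
    name: str
    image: str
    port: int = 8080


def render_command(service: ServiceDefinition, backend: str) -> list[str]:
    """Translate a service definition into a deploy command for one backend."""
    if backend == "kubernetes":
        return [
            "kubectl", "create", "deployment", service.name,
            f"--image={service.image}", f"--port={service.port}",
        ]
    if backend == "cloud-run":
        return [
            "gcloud", "run", "deploy", service.name,
            f"--image={service.image}", f"--port={service.port}",
        ]
    raise ValueError(f"unsupported backend: {backend}")


# Hypothetical service; the same definition targets either backend.
checkout = ServiceDefinition(name="checkout", image="example.com/checkout:1.4.2")
for backend in ("kubernetes", "cloud-run"):
    print(" ".join(render_command(checkout, backend)))
```

The application team only ever edits the service definition. The platform team owns `render_command`, so swapping or adding a backend does not change what developers write.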

Integration with GitOps, CI/CD, and Policy Enforcement

Modern platforms also integrate deeply with existing software delivery and governance practices. This includes:

- GitOps workflows that treat environment and application configuration as version-controlled code

- CI/CD pipelines that automate testing, packaging, and release processes

- Policy engines such as Open Policy Agent (OPA) or Kyverno, ensuring security, compliance, and auditability across all deployments

These integrations allow organizations to scale governance without introducing friction or delay in the development process.
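To illustrate what a policy check does, the sketch below expresses two common rules in plain Python: container images must be pinned to a fixed tag or digest, and every container must declare resource limits. This is not OPA's Rego language or a Kyverno policy, only an illustration of the kind of rule those engines evaluate before a deployment is admitted. The manifests are hypothetical.

```python
def is_pinned(image: str) -> bool:
    """True if the image reference uses a digest or a tag other than 'latest'."""
    last_segment = image.rsplit("/", 1)[-1]  # ignore any registry host:port prefix
    if "@" in last_segment:
        return True  # digest reference
    return ":" in last_segment and not last_segment.endswith(":latest")


def violations(manifest: dict) -> list[str]:
    """Return human-readable policy violations for a Deployment-like manifest."""
    containers = (
        manifest.get("spec", {}).get("template", {}).get("spec", {}).get("containers", [])
    )
    problems = []
    for container in containers:
        name = container.get("name", "<unnamed>")
        if not is_pinned(container.get("image", "")):
            problems.append(f"{name}: image must use a fixed tag or digest")
        if "limits" not in container.get("resources", {}):
            problems.append(f"{name}: resource limits are required")
    return problems


def deployment(image: str, resources: dict) -> dict:
    """Build a minimal Deployment-shaped dict for the examples below."""
    container = {"name": "api", "image": image, "resources": resources}
    return {"kind": "Deployment", "spec": {"template": {"spec": {"containers": [container]}}}}


bad = deployment("example.com/api:latest", {})
good = deployment("example.com/api:1.4.2", {"limits": {"cpu": "500m", "memory": "256Mi"}})
print(violations(bad))
print(violations(good))
```

Running checks like these in the pipeline, rather than in code review, is what lets governance scale without adding a manual approval step.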

Key Considerations for Transitioning Beyond Kubernetes

Kubernetes remains a powerful and mature platform, but it is no longer the automatic choice for every workload or organization. As teams explore alternatives, it is essential to approach the transition with a clear understanding of the trade-offs, requirements, and long-term implications.

- Evaluate Platform Fit for Your Workloads

Choosing the right platform begins with understanding the nature of your applications and delivery patterns. Consider:

- Are your workloads stateless or long-running?

- Do you rely on event-driven triggers or scheduled jobs?

- Are there strict latency, availability, or compliance requirements?

For example, lightweight APIs or background jobs may benefit more from serverless platforms, while Kubernetes or Nomad may best serve stateful systems or complex networking requirements.

Avoid choosing a platform solely based on architectural trends; align the platform’s strengths with your application's operational characteristics and lifecycle expectations.
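The questions above can be turned into a rough first-pass filter. The Python sketch below encodes the guidance from this article as a simple rule set. The `Workload` fields and category names are hypothetical, and the 900-second default is an assumed function time limit that you should replace with your provider's actual limit. Treat the result as a conversation starter, not a decision.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    stateful: bool
    event_driven: bool
    max_runtime_seconds: int
    needs_custom_networking: bool = False


def suggest_platform(workload: Workload, function_timeout_seconds: int = 900) -> str:
    """Map workload traits to a platform category (first-pass heuristic only)."""
    # Stateful systems and complex networking favor a full orchestrator.
    if workload.stateful or workload.needs_custom_networking:
        return "kubernetes-or-nomad"
    # Short, event-triggered tasks fit function platforms.
    if workload.event_driven and workload.max_runtime_seconds <= function_timeout_seconds:
        return "serverless-functions"
    # Everything else that is stateless fits a managed container runtime.
    return "managed-container-runtime"


# Hypothetical workloads for illustration.
workloads = [
    Workload("thumbnailer", stateful=False, event_driven=True, max_runtime_seconds=30),
    Workload("storefront-api", stateful=False, event_driven=False, max_runtime_seconds=0),
    Workload("orders-db", stateful=True, event_driven=False, max_runtime_seconds=0),
]
for w in workloads:
    print(f"{w.name}: {suggest_platform(w)}")
```

Latency, availability, and compliance requirements are deliberately left out of this sketch. They tend to need case-by-case judgment rather than a rule.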

- Assess Organizational Skills and Ecosystem Readiness

Kubernetes has a well-established ecosystem, with extensive community support, mature tooling, and widely available expertise. Transitioning to a new orchestration model or platform may involve:

- Training teams to use unfamiliar tools or deployment models

- Adapting internal processes, automation, and monitoring systems

- Managing the support gap in less mature or niche platforms

Before adopting an alternative, assess the operational impact on your infrastructure teams, developers, and security stakeholders. A platform is only as effective as the team operating it.

- Analyze Portability, Lock-In, and Compliance Constraints

Serverless and managed platforms provide significant operational simplicity, but often limit flexibility in exchange:

- Workload portability may be reduced if services are tightly integrated with a single cloud provider

- Custom security or data handling requirements may be difficult to enforce in black-box execution environments

- Compliance and audit needs may require visibility or control that these platforms do not natively offer

Before committing to a platform, evaluate whether its architecture supports your long-term strategies around multi-cloud, regulatory compliance, and operational resilience.

Moving beyond Kubernetes does not mean replacing it entirely. It means recognizing that the orchestration landscape is evolving and that different platforms may now offer better alignment with specific workload types, team capabilities, and business priorities.

Rethinking Orchestration: Building Practical Transition Strategies

Not every organization needs to move beyond Kubernetes, but every team should re-evaluate whether it remains the right fit for their current goals.

- If your developers spend more time writing manifests than building features, the platform may be too complex for your needs.

- If small services require weeks of platform setup and integration before they can ship, it's time to consider managed or zero-ops alternatives.

- If you're scaling teams rapidly and onboarding new engineers frequently, developer-friendly platforms can reduce learning curves and increase agility.

Some organizations begin the transition by moving low-risk services to managed container platforms. Others start by abstracting Kubernetes behind internal developer portals that offer self-service deployment. A few replace Kubernetes entirely in greenfield environments, where control is less important than speed and time-to-market.

Transitioning beyond Kubernetes is not a one-time migration; it’s a shift in platform thinking. The goal is not to remove Kubernetes everywhere, but to use it only where it adds clear value.

Gradual Adoption vs. Greenfield Initiatives

Modern teams rarely replace platforms in a single step. A more sustainable strategy is to adopt alternative orchestration models in a phased manner:

- Start with new or experimental services on serverless platforms or zero-ops runtimes

- Maintain critical or stateful workloads on Kubernetes while optimizing developer access through internal tools

- Evaluate hybrid models, where parts of the workload are containerized and others are executed via functions or Wasm

This enables experimentation without destabilizing production systems or retraining entire teams.

Build Abstractions Instead of Rewriting Everything

For many organizations, the immediate challenge is not infrastructure; it’s developer experience. Instead of migrating away from Kubernetes entirely, teams can build abstractions that simplify the developer interaction model:

- Use tools like Backstage to provide unified deployment portals

- Create standardized templates and pipelines that shield developers from infrastructure details

- Introduce internal service catalogs and APIs that offer consistent workflows, regardless of the orchestration backend

The goal is to reduce complexity without discarding the operational maturity that Kubernetes offers.

Migration Path: A Phased Approach to Moving Beyond Kubernetes

Transitioning from Kubernetes to modern orchestration platforms does not require a full system replacement. Many teams adopt a phased strategy that aligns with their architecture, team capacity, and risk tolerance.

- Phase 1 - Planning and Assessment - Begin by identifying application components suited for lighter platforms. Stateless APIs, background workers, and event-driven services are often strong candidates for serverless or managed container runtimes.

- Phase 2 - Pilot Adoption - Migrate low-risk workloads first. These early transitions allow teams to evaluate performance, operational impact, and cost efficiency without affecting critical systems. Many report substantial reductions in complexity and improved deployment times at this stage.

- Phase 3 - Progressive Rollout - Based on pilot success, teams gradually expand adoption. Managed platforms take over routine services, while Kubernetes remains in use for workloads requiring fine-grained control or custom networking.

- Outcomes Observed - Teams that adopt this approach often see measurable benefits: lower infrastructure costs, faster release cycles, and reduced platform maintenance. Engineers who previously focused on managing orchestration infrastructure can return their focus to product development.

Beyond Kubernetes: Now Is the Time to Redefine Your Platform Strategy

The role of orchestration has changed. It’s no longer about managing clusters; it’s about enabling teams to deliver software faster, with less overhead and more focus on what matters.

Kubernetes helped standardize infrastructure at a critical time, but the landscape has moved forward. Today’s cloud-native applications demand flexibility, simplicity, and a better developer experience. Serverless containers, managed runtimes, and internal platforms are no longer considered alternatives. They’re becoming the default for organizations focused on speed and scale without unnecessary complexity.

This shift isn’t about abandoning Kubernetes. It’s about evolving your approach. Successful teams are not choosing one tool over another; they are building platforms that align with different types of workloads, delivery goals, and organizational needs.

If your current orchestration setup is slowing your teams down, now is the time to reassess. Not everything needs to be re-architected, but the platform your developers rely on should reflect the way modern software is built and operated.

Ready to take the next step?

Start by asking one question:

Is your current platform helping developers move faster, or holding them back?

If it’s the latter, it’s time to rethink what orchestration means for your organization. Begin small: test managed runtimes for new workloads, experiment with developer self-service, and invest in automation that hides unnecessary complexity.

The next phase of platform strategy isn’t just about containers. It’s about control, clarity, and outcomes.

Now is the time to choose the platform that matches your priorities, not your past decisions.