As organizations modernize their IT operations, few trends are as defining as the move toward hybrid and multi-cloud architectures. These models aren’t simply technological upgrades — they’re strategic responses to the growing need for flexibility, control, and regulatory alignment across increasingly complex environments.

Why the Traditional Cloud Model Isn’t Enough Anymore

While public cloud adoption is widespread, it often meets limitations in real-world enterprise contexts. Organizations are rethinking infrastructure choices due to:

- Data locality requirements – Sensitive workloads tied to region-specific regulations

- Latency-sensitive applications – Operations that require compute power closer to the user or device

- Mixed technology estates – Legacy systems that don’t migrate easily to cloud-native platforms

- Risk mitigation – A need to avoid lock-in and diversify vendor dependencies

Understanding the Architectural Shifts Behind Modern Infrastructure

To understand how providers like Azure, AWS, and Google approach this shift, it’s essential to clarify the foundational models:

Hybrid Cloud: Unifying On-Premises and Cloud Environments

Hybrid cloud addresses the challenge of fragmented infrastructure by enabling organizations to run workloads both on-premises and in the cloud — while maintaining centralized management and policy control.

It’s especially useful when:

- Data residency or compliance rules prevent full cloud migration

- Legacy systems still serve critical business functions

- Businesses want to modernize at their own pace without disruption

Hybrid cloud doesn’t eliminate private infrastructure — it extends its value by integrating it with public cloud agility.

Multi-Cloud: Distributing Workloads Across Providers

Multi-cloud is not just about redundancy — it’s a strategic choice to use multiple cloud providers based on workload requirements, service capabilities, or vendor risk mitigation.

Common drivers include:

- Leveraging unique features from different platforms (e.g., AI from GCP, enterprise tooling from Azure)

- Avoiding lock-in by balancing between providers

- Optimizing cost and performance through geographic distribution

Multi-cloud environments demand a platform-agnostic strategy and tools that abstract or integrate seamlessly across ecosystems.

Edge Computing: Bringing Compute Closer to Where Data Lives

Edge computing reduces latency and offloads central infrastructure by placing compute and storage near the source of data, whether that’s a hospital, factory, or retail site.

This model becomes critical for:

- Real-time processing (e.g., manufacturing automation, autonomous vehicles)

- Limited connectivity environments where round-trips to the cloud introduce delay

- Scenarios where data must remain local for regulatory or operational reasons

Edge is less about central orchestration, and more about local autonomy, scale, and responsiveness.
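The trade-off described above can be sketched as a simple placement rule. This is only an illustration: the `Workload` fields, the `choose_placement` function, and every number below are hypothetical and do not come from any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    latency_budget_ms: float    # maximum acceptable end-to-end response time
    processing_ms: float        # time spent computing, wherever it runs
    data_must_stay_local: bool  # regulatory or operational constraint


def choose_placement(workload: Workload, cloud_round_trip_ms: float) -> str:
    """Return "edge" or "cloud" for a workload (illustrative rule only)."""
    if workload.data_must_stay_local:
        return "edge"
    # Running in the cloud adds the network round trip to the processing time.
    cloud_total_ms = workload.processing_ms + cloud_round_trip_ms
    return "cloud" if cloud_total_ms <= workload.latency_budget_ms else "edge"


# Hypothetical workloads and an assumed 80 ms round trip to the nearest region.
robot_arm = Workload("robot-arm-control", 20, 5, False)
nightly_report = Workload("nightly-report", 60_000, 30_000, False)
patient_imaging = Workload("patient-imaging", 5_000, 1_000, True)

for w in (robot_arm, nightly_report, patient_imaging):
    print(w.name, "->", choose_placement(w, cloud_round_trip_ms=80))
```

A real assessment would also weigh connectivity reliability, data volume, and cost, which this sketch leaves out.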

These models aren't mutually exclusive. In fact, Azure Arc, AWS Outposts, and Google Anthos are designed with overlapping goals, but each one prioritizes these models differently, shaping how they support modern infrastructure.

Platform Overviews

AWS Outposts: Cloud Infrastructure Delivered On-Premises

Source - AWS

Outposts brings AWS compute, storage, and a supported subset of AWS services directly to customer premises on AWS-managed hardware. This allows organizations to build and run AWS-native applications locally, with consistent APIs and tools across both cloud and edge.

Key Strengths

- Supports EC2, EBS, ECS, EKS, RDS, and more — running on-premises

- Fully managed and monitored by AWS

- Low-latency local processing, suited to edge and regulated-industry workloads

- Seamless integration with AWS services like IAM, CloudWatch, and Systems Manager

Compatibility & Integration

Outposts is tightly integrated with the AWS ecosystem and operates as an extension of the AWS Region. It’s best suited for organizations already embedded in AWS, requiring local deployment for compliance, performance, or data sovereignty needs.

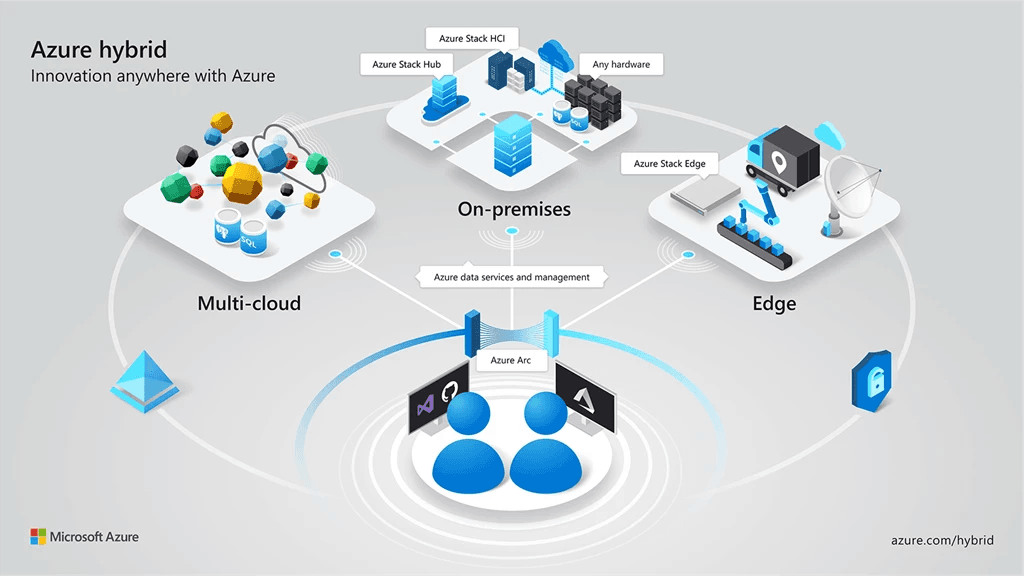

Azure Arc: Extending Azure Beyond the Cloud

Source - Azure

Azure Arc brings Azure’s management, policy, and security capabilities to infrastructure across environments — including on-premises data centers, other clouds, and edge locations. Instead of forcing migration, it projects the Azure control plane onto diverse systems, enabling centralized operations without disrupting existing architecture.

Key Strengths

- Unified governance through Azure Policy, Monitor, and Defender

- Manage Kubernetes clusters, VMs, and SQL servers outside Azure

- Lightweight, agent-based onboarding model

- Supports GitOps and declarative configuration for consistent deployments

Compatibility & Integration

Azure Arc integrates natively with the broader Azure ecosystem, including Azure Active Directory (now Microsoft Entra ID), Azure Security Center (now part of Microsoft Defender for Cloud), and Azure DevOps. It also supports multi-cloud registration, allowing oversight of AWS or GCP resources under a single operational model.

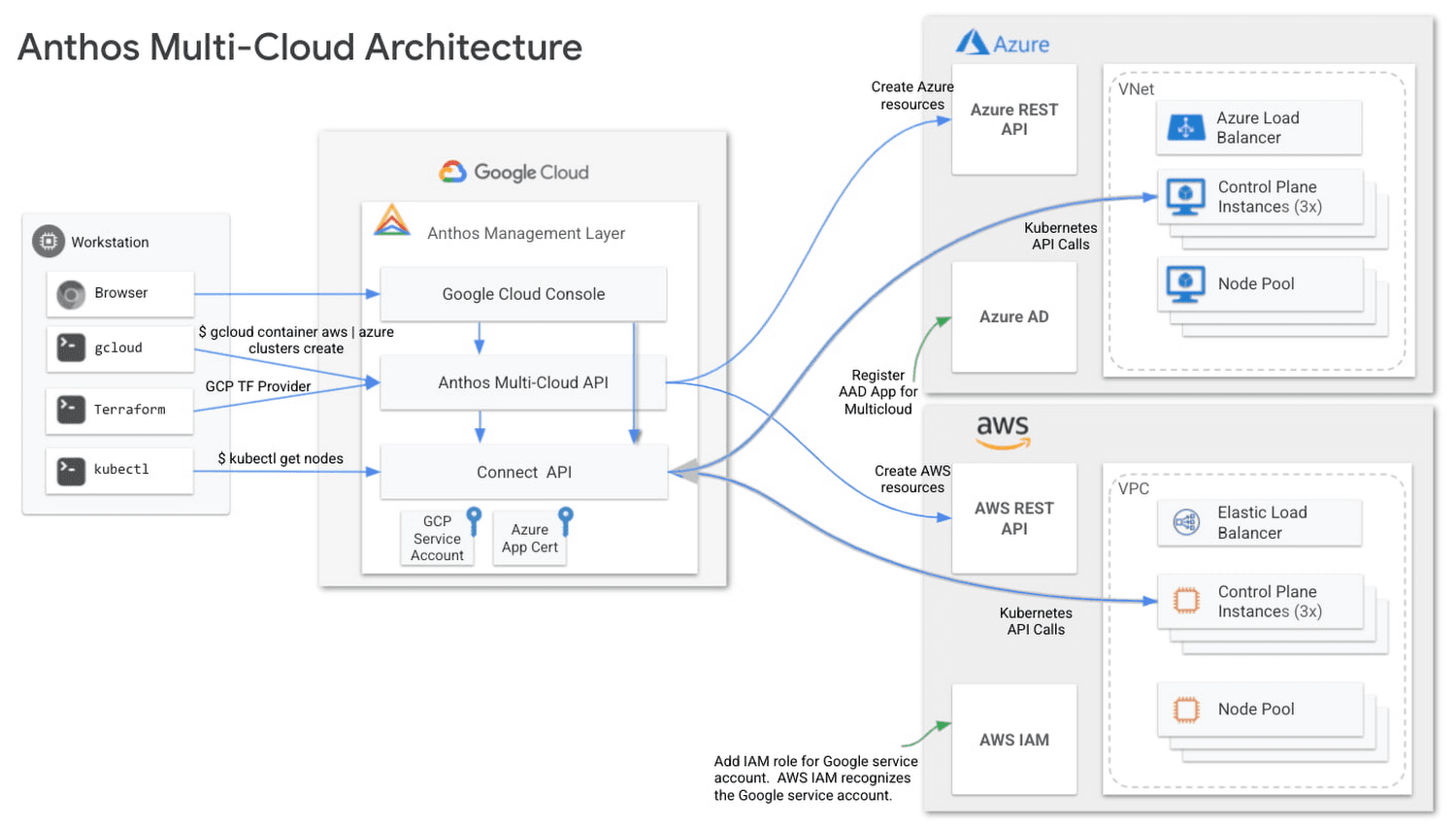

Google Anthos: Application-Centric and Kubernetes-Native

Source - GCP

Anthos delivers a consistent platform for building and managing containerized applications across clouds and on-premises infrastructure. Anchored in Kubernetes and service mesh architecture, it is designed for teams adopting modern DevOps workflows and cloud-native design patterns.

Key Strengths

- Unified management for Kubernetes clusters across GCP, AWS, Azure, and on-prem

- Built-in support for Istio-based service mesh and policy enforcement

- Integrates with CI/CD pipelines and GitOps workflows

- Enables app modernization through abstraction from infrastructure

Compatibility & Integration

Anthos is optimized for Kubernetes-based workloads and integrates closely with GKE, Config Management, and Cloud Operations Suite. While it supports hybrid and multi-cloud, its operational model assumes maturity in containers and declarative infrastructure.

Quick Snapshot: What’s Ahead in the Comparison

Hybrid and multi-cloud platforms vary not just in implementation, but in the strategic intent behind them. As we evaluate AWS Outposts, Azure Arc, and Google Anthos, it’s important to understand what each represents before diving into the details.

| Comparison Area | AWS Outposts | Azure Arc | Google Anthos |
| --- | --- | --- | --- |
| Core Architecture | Physical AWS hardware on-premises | Azure management extended to any infrastructure | Kubernetes-based platform for multi-cloud deployments |
| Primary Use Case | Low-latency, data residency, AWS-consistent local environment | Centralized governance across diverse resources | Cloud-native app portability and modern DevOps |
| Management Model | Fully managed hardware and AWS APIs locally | Agent-based control plane extending Azure services | Kubernetes clusters with integrated CI/CD pipelines |
| Workload Support | EC2 VMs, EKS Kubernetes clusters | VMs, Kubernetes clusters, databases | Kubernetes-first with VM support via KubeVirt |
| Multi-Cloud Capability | Limited outside AWS ecosystem | Supports multi-cloud resource registration | Designed for true multi-cloud across GCP, AWS, Azure |
| Security & Compliance | Cloud-grade AWS security on-prem | Azure Defender, Sentinel across clouds and on-prem | Zero-trust model with policy automation |
| Scaling & Updates | AWS-managed hardware updates, consistent scaling | API-driven, lightweight agent deployment | Automated upgrades with GKE integration |
| Developer Experience | AWS tools and APIs locally | Azure Resource Manager and policy extensions | Kubernetes-native with service mesh and GitOps |

Let’s take a deeper look into each of these features.

Strategic Approaches to Hybrid and Multi-Cloud

Each of the three platforms—AWS Outposts, Azure Arc, and Google Anthos—represents a unique strategy toward solving the complexities of hybrid and multi-cloud operations. These aren’t just technical tools; they are reflections of how each provider envisions the future of enterprise infrastructure.

Understanding their underlying philosophies is critical before diving into services or features. This section outlines those core ideas, and what they mean in practical terms.

AWS Outposts: Cloud Experience Without Leaving the Data Center

AWS Outposts is built on the idea that the cloud experience shouldn’t end at the data center's edge. It brings the full AWS environment—hardware, services, and operational models—on-premises, aiming to eliminate differences between local and cloud-based infrastructure.

This makes Outposts a compelling solution for organizations that want to stay fully within the AWS ecosystem, but must operate workloads locally due to latency, data residency, or edge-specific requirements.

Key characteristics of AWS Outposts:

- The same APIs and a supported subset of AWS services, running within the customer’s data center

- Seamless integration with AWS-native tools like CloudWatch and Systems Manager

- Pre-configured hardware, fully managed by AWS, ensuring consistency

- Optimized for low-latency and data-sensitive workloads in industries such as healthcare, media, and manufacturing

Rather than adapting to hybrid complexity, Outposts removes it by narrowing the operating model to just AWS everywhere.

Azure Arc: Centralized Control Across Diverse Environments

Azure Arc takes a control-first approach. Instead of relocating workloads or extending physical infrastructure, Arc projects Azure’s management and policy framework onto resources wherever they live — whether in another cloud, on-premises, or at the edge.

This approach appeals to enterprises navigating operational sprawl across environments, who need to standardize governance and security without overhauling existing infrastructure.

What sets Azure Arc apart:

- Extends Azure Policy, Defender, and Monitor to non-Azure resources

- Centralized governance across VMs, Kubernetes clusters, and databases

- Enables multi-cloud registration, bringing AWS or GCP resources under Azure oversight

- Lightweight, agent-based integration supports gradual adoption without migration

Arc doesn’t reshape workloads; it reshapes visibility and control, allowing enterprises to unify operations across fragmented systems.
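The idea of one policy applied across resources in different environments can be sketched as follows. This is a conceptual model only, not the Azure Policy API: the `Resource` class, the `REQUIRED_TAGS` rule, and the inventory are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Resource:
    name: str
    environment: str  # e.g. "on-prem", "aws", "gcp", "edge"
    kind: str         # e.g. "vm", "kubernetes", "sql"
    tags: dict = field(default_factory=dict)


# Hypothetical governance rule: every resource must carry these tags.
REQUIRED_TAGS = ("owner", "cost-center")


def non_compliant(resources):
    """Map each non-compliant resource name to the tags it is missing."""
    findings = {}
    for r in resources:
        missing = [t for t in REQUIRED_TAGS if t not in r.tags]
        if missing:
            findings[r.name] = missing
    return findings


# One inventory, regardless of where each resource actually runs.
inventory = [
    Resource("erp-db", "on-prem", "sql", {"owner": "finance", "cost-center": "cc-100"}),
    Resource("web-vm-01", "aws", "vm", {"owner": "web"}),
    Resource("analytics-cluster", "gcp", "kubernetes", {}),
]

print(non_compliant(inventory))
```

The point of the sketch is the shape of the model: the rule is defined once and evaluated against a single inventory, while the workloads themselves stay where they are.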

Google Anthos: A Platform-Centric View of Multi-Cloud

Anthos is designed for teams that are already building — or ready to build — applications with modern cloud-native practices. It’s less concerned with managing legacy infrastructure and more focused on enabling consistent deployment and operations across any environment, using Kubernetes as the foundation.

Anthos thrives in ecosystems where agility, portability, and developer autonomy are prioritized, even across multiple cloud providers.

Where Google Anthos excels:

- Native support for multi-cloud Kubernetes deployments, including AWS and Azure

- Integrated tools for GitOps, CI/CD pipelines, and policy automation

- Emphasis on service mesh (Istio) and configuration management at scale

- Suited for container-first strategies, modernizing existing applications through abstraction

Anthos is not a visibility layer; it’s an application platform. For teams looking to decouple from infrastructure constraints, its architecture provides maximum flexibility, but demands cloud-native maturity.

| Aspect | AWS Outposts | Azure Arc | Google Anthos |
| --- | --- | --- | --- |
| Core Philosophy | Bring AWS cloud services physically on-premises | Extend Azure management to any environment | Create a consistent app platform across clouds via Kubernetes |
| Primary Goal | Uniformity between cloud and edge | Unified governance and control | Portability and consistency for cloud-native apps |
| Delivery Model | Fully managed AWS hardware in data centers | Lightweight agents register external resources in Azure | Kubernetes-based abstraction layer across environments |
| Best Suited For | Low-latency, data-residency use cases in AWS-centric workloads | Organizations with diverse environments that need centralized policy | Teams adopting DevOps and microservices with multi-cloud flexibility |
| Cloud Dependency | Deeply tied to the AWS ecosystem | Azure-centric but multi-cloud aware | Designed for hybrid/multi-cloud across GCP, AWS, and Azure |
| Operational Complexity | Low (AWS-managed hardware and services) | Moderate (policy-driven, agent-based management) | Higher (requires Kubernetes maturity and open tooling) |

Infrastructure Reach & Architectural Approach

How a platform interacts with your existing infrastructure is more than a technical choice: it shapes operational consistency, cost, and scalability.

This section outlines how AWS Outposts, Azure Arc, and Google Anthos are designed to reach across environments, and what that means in real-world scenarios.

AWS Outposts: Bringing AWS Infrastructure to Your Location

AWS Outposts doesn’t adapt to your infrastructure — it replaces part of it. By delivering AWS-owned hardware directly into your data center or co-location facility, it offers a local deployment that is indistinguishable from the AWS cloud in how it behaves and is managed.

This architectural choice allows AWS to maintain full operational control and service fidelity while letting customers meet strict latency or compliance requirements.

Infrastructure characteristics of AWS Outposts:

- Ships with pre-configured racks installed and operated by AWS

- Exposes AWS APIs locally for supported services such as EC2, RDS, and EKS

- Requires stable power, network, and space on the customer’s premises

- Designed for single-cloud consistency, not multi-cloud flexibility

The result is a familiar AWS environment, running next to your applications, but fully managed by AWS down to the firmware.

Azure Arc: Lightweight Agent-based Control Over Any Infrastructure

Azure Arc takes a different path—it reaches into your infrastructure without needing to install any physical Azure hardware. It does so by projecting Azure’s control plane onto your existing systems through lightweight agents and integrations.

Instead of moving workloads into Azure, Arc brings Azure’s policies, security, and automation into whatever environment you already run—whether that’s another cloud, on-prem data centers, or edge sites.

How Azure Arc integrates with infrastructure:

- Uses agent-based connectivity to onboard VMs, Kubernetes clusters, and databases

- No hardware shipping or replacement of your existing infrastructure

- Extends Azure Monitor, Policy, and Defender to registered resources

- Works across on-premises, other clouds, and edge locations

It’s an overlay approach: you keep the infrastructure you trust, and gain the visibility and governance Azure provides.

Google Anthos: Consistent Application Layer Across Environments

Anthos builds its architecture around the idea of application abstraction—not infrastructure replacement. It runs on Kubernetes and focuses on standardizing deployment across environments by treating every location as a managed Kubernetes cluster, no matter where it resides.

Whether running on GCP, AWS, Azure, or on-premises, Anthos builds consistency at the container level. That makes infrastructure differences largely invisible to development and operations teams.

Anthos’ architectural principles:

- Installs Anthos-configured GKE or compatible Kubernetes clusters across environments

- Supports multi-cloud and hybrid deployments without physical hardware

- Built-in support for Istio-based service mesh and config management

- Designed for teams building and scaling microservices across locations

Rather than extending a cloud platform outward, Anthos pulls environments into a common Kubernetes operating model, which suits teams with cloud-native maturity.

| Aspect | AWS Outposts | Azure Arc | Google Anthos |
| --- | --- | --- | --- |
| Deployment Model | AWS-managed hardware installed on-site | Lightweight agents onboard existing infrastructure | Kubernetes clusters provisioned across environments |
| Infrastructure Control | Fully owned and operated by AWS | Organization retains infrastructure, Azure provides governance | Control lies within Kubernetes-based tooling |
| Hardware Requirement | Requires AWS rack and local setup | No new hardware required | Uses existing or cloud-hosted Kubernetes clusters |
| Reach | Limited to locations AWS can deploy physical equipment | Any cloud, data center, or edge site with connectivity | Multi-cloud and hybrid locations via Kubernetes abstraction |
| Operational Focus | Delivering AWS experience locally | Centralizing governance and visibility | Consistent app deployment and config management |

Application Management & Developer Experience

Managing modern applications is no longer just about where they run—it’s about how consistently teams can deploy, monitor, and iterate across environments. Whether you’re dealing with containers, virtual machines, or both, the ability to deliver and operate applications efficiently is central to hybrid and multi-cloud success.

Below is a look at how each platform supports developers and operations teams across different environments, with a focus on tooling, consistency, and workload flexibility.

AWS Outposts: Full AWS Toolchain Available On-Premises

Outposts brings the entire AWS development experience on-premises, giving teams local access to the same APIs, services, and automation tools used in the AWS cloud. Developers don’t need to adjust their workflows or reconfigure tools to accommodate hybrid deployments.

What it enables:

- Use of native AWS services like EC2, EKS, and RDS locally

- Same development, deployment, and CI/CD patterns as in the cloud

- Integration with AWS CloudFormation, CodePipeline, and Systems Manager

- Supports both container (EKS) and VM (EC2) workloads

Outposts is ideal for AWS-native teams that want consistent tooling across both cloud and data center without compromise.

Azure Arc: Centralized Application Management Across Environments

Azure Arc gives development and operations teams a familiar way to manage applications—even if they’re running outside Azure. By projecting Azure Resource Manager (ARM) onto external infrastructure, Arc allows consistent deployment, policy enforcement, and monitoring from a central place.

What it enables:

- Use of Azure services like App Services, Functions, and SQL across environments

- Central management of Kubernetes clusters, VMs, and databases

- Supports Azure DevOps and GitHub Actions for streamlined delivery

- Offers dual support for traditional VMs and Kubernetes containers

Arc suits organizations seeking centralized governance without disrupting legacy systems or developer workflows.

Google Anthos: Cloud-Native App Delivery Using Kubernetes

Anthos is purpose-built for teams already operating in a Kubernetes-driven ecosystem. It provides a tightly integrated platform for deploying, managing, and securing cloud-native applications across multiple environments—using the same declarative, container-first approach.

What it enables:

- Native integration with GKE, Anthos Config Management, and CI/CD pipelines

- Built-in support for Istio-based service mesh and policy automation

- VM support via KubeVirt, though containers remain the primary model

- Emphasis on GitOps, developer autonomy, and platform consistency

Anthos works best for DevOps-centric teams that prioritize agility and need to manage scalable applications across diverse clouds.

| Aspect | AWS Outposts | Azure Arc | Google Anthos |
| --- | --- | --- | --- |
| Development Model | Native AWS tools and APIs used locally | Azure Resource Manager for external and Azure-based resources | Kubernetes-first with full DevOps integration |
| Container Support | EKS (Kubernetes) on-prem | Azure Kubernetes Service (AKS) and any CNCF-conformant cluster | Anthos GKE, multi-cloud and hybrid |
| VM Support | Full EC2 support | Full VM management via Azure Arc-enabled servers | KubeVirt for VM orchestration inside Kubernetes |
| CI/CD Integration | AWS CodePipeline, CodeBuild, and CloudFormation | Azure DevOps, GitHub Actions | GitOps, Cloud Build, and Anthos Config Management |

Security, Compliance & Identity Management

Security is more than just protecting infrastructure; it’s about aligning with compliance requirements, managing access, and ensuring visibility across environments. Each platform approaches this with its own philosophy, shaped by its broader cloud ecosystem.

AWS Outposts: Cloud-Grade Security Delivered On-Site

AWS Outposts extends the mature AWS security model to physical hardware in the data center. For teams that already trust AWS’s security and compliance frameworks, Outposts makes it possible to apply them consistently, even to local workloads.

- Identity managed with AWS IAM, consistent across cloud and on-prem

- Data encrypted at rest and in transit using AWS KMS

- Integrates with AWS CloudTrail, GuardDuty, and Security Hub for threat detection

- Supports major compliance standards (HIPAA, SOC, FedRAMP) out-of-the-box

Azure Arc: Centralized Security Oversight Across Environments

Azure Arc focuses on control and governance. It projects Azure’s security services across all connected resources — whether running in Azure, on-prem, or other clouds. This centralization simplifies compliance and standardizes security operations.

- Extends Microsoft Defender for threat protection on Kubernetes, VMs, and data services

- Works with Azure Sentinel for centralized SIEM and SOAR

- Enables policy enforcement using Azure Policy across hybrid and multi-cloud

- Identity and access managed through Azure Active Directory (AAD)

Google Anthos: Security Designed for Distributed, Modern Architectures

Anthos builds security into the platform from the ground up. Its zero-trust model enforces strong identity and policy controls within and across environments. Rather than retrofitting legacy tools, it relies on cloud-native enforcement designed for containers.

- Service-level identity via Istio for secure service-to-service communication

- Policy management using Anthos Config Management and Gatekeeper

- Integration with Binary Authorization and Shielded GKE Nodes for runtime protection

- Google Cloud’s compliance support extends to Anthos-deployed environments
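Policy-as-code of the kind listed above usually means rules that are evaluated automatically before a workload is admitted. The sketch below shows the idea in plain Python; it is not Gatekeeper's or Binary Authorization's actual rule language, and the registry prefix, function name, and pod layout are hypothetical.

```python
# Hypothetical trusted registry prefix; a real policy would list your own.
ALLOWED_REGISTRIES = ("registry.example.com/",)


def violations(pod_spec: dict) -> list:
    """Return one message per rule a container breaks (illustrative rules)."""
    problems = []
    for c in pod_spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        image = c.get("image", "")
        # Rule 1: images must come from a trusted registry.
        if not image.startswith(ALLOWED_REGISTRIES):
            problems.append(f"{name}: image '{image}' is not from an allowed registry")
        # Rule 2: images must be pinned by digest rather than a mutable tag.
        if "@sha256:" not in image:
            problems.append(f"{name}: image is not pinned by digest")
    return problems


pod = {
    "containers": [
        {"name": "api", "image": "registry.example.com/api@sha256:abc123"},
        {"name": "sidecar", "image": "docker.io/library/nginx:latest"},
    ]
}

for message in violations(pod):
    print("DENY:", message)
```

Because the rules live in code, they can be versioned and reviewed like any other change, which is what makes the approach practical across many clusters.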

| Aspect | AWS Outposts | Azure Arc | Google Anthos |
| --- | --- | --- | --- |
| Security Model | Extends AWS-native security tools on-prem | Centralized control with Azure Defender & Sentinel | Zero-trust, policy-as-code architecture |
| Identity Management | IAM (same as cloud AWS) | Azure Active Directory (RBAC, conditional access) | Workload identity & Kubernetes-native policies |
| Compliance | Supports AWS-regulated standards | Applies Azure policies across environments | Designed for container compliance and isolation |
| Monitoring & Threat Detection | GuardDuty, CloudTrail, Security Hub | Azure Monitor, Sentinel SIEM | Cloud Audit Logs, Binary Authorization, Config Mgmt |

Operational Consistency & Monitoring

In hybrid and multi-cloud environments, operational complexity grows. Consistent monitoring, logging, and configuration enforcement are essential. Each platform addresses this need differently, from tightly integrated toolsets to flexible, open-source-friendly operations.

AWS Outposts: Consistency Through Familiar AWS Tools

AWS Outposts enables teams to operate hybrid systems without learning new tools. All monitoring, logging, and operational workflows mirror what’s already used in the AWS cloud, helping maintain consistency with minimal reconfiguration.

- Monitoring with CloudWatch, covering infrastructure and application metrics

- Resource management through Systems Manager and AWS Config

- Unified logging and alerting via CloudTrail and EventBridge

- Operations aligned with AWS’s shared responsibility model

Azure Arc: Unified Visibility Across All Connected Resources

Azure Arc’s operational model is built on Azure Monitor and Log Analytics, providing insight into workloads wherever they run. By treating external resources as extensions of Azure, Arc centralizes alerts, diagnostics, and performance tracking.

- Monitors VMs, containers, and databases through Azure-native tools

- Logs and metrics are collected into centralized workspaces

- Custom dashboards and proactive alerts are available in Azure Monitor

- Supports change tracking and inventory via Azure Automation

Google Anthos: Declarative Operations and GitOps Automation

Anthos approaches operations with a Kubernetes-first mindset. It emphasizes declarative configuration, automation through GitOps, and built-in observability for distributed applications.

- Monitoring and logging through Cloud Operations Suite (formerly Stackdriver)

- Policy enforcement and config drift detection via Anthos Config Management

- Prometheus and Grafana are supported for container-native metrics

- Git-based workflows for applying and tracking changes at scale
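Config drift detection, as mentioned in the list above, comes down to comparing the desired state held in Git with what each cluster actually runs. The following is a minimal sketch of that comparison, not Anthos Config Management's implementation; the object keys, settings, and cluster names are made up for illustration.

```python
def detect_drift(desired: dict, actual: dict) -> dict:
    """Compare desired state (from Git) with a cluster's observed state.

    Both arguments map an object key, e.g. "Deployment/web", to its settings.
    """
    drift = {}
    for key, want in desired.items():
        have = actual.get(key)
        if have is None:
            drift[key] = "missing"
        elif have != want:
            changed = sorted(k for k in want.keys() | have.keys()
                             if want.get(k) != have.get(k))
            drift[key] = "changed: " + ", ".join(changed)
    # Anything running that Git does not describe is flagged as unmanaged.
    for key in actual.keys() - desired.keys():
        drift[key] = "unmanaged"
    return drift


# Hypothetical desired state, as it might be rendered from a Git repository.
desired_state = {
    "Deployment/web": {"image": "web:1.4.2", "replicas": 3},
    "ConfigMap/web-settings": {"LOG_LEVEL": "info"},
}

# Hypothetical observed state of two clusters in different environments.
clusters = {
    "gcp-prod": {
        "Deployment/web": {"image": "web:1.4.2", "replicas": 3},
        "ConfigMap/web-settings": {"LOG_LEVEL": "info"},
    },
    "onprem-prod": {
        "Deployment/web": {"image": "web:1.4.2", "replicas": 2},
        "Deployment/debug-shell": {"image": "busybox:1.36"},
    },
}

for cluster_name, observed in clusters.items():
    print(cluster_name, detect_drift(desired_state, observed))
```

A GitOps controller would typically go one step further and reconcile the cluster back to the desired state; this sketch only reports the differences.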

| Aspect | AWS Outposts | Azure Arc | Google Anthos |
| --- | --- | --- | --- |
| Monitoring Tools | CloudWatch, Systems Manager, AWS Config | Azure Monitor, Log Analytics | Cloud Ops Suite, Prometheus, Config Mgmt |
| Centralized Operations | Yes, within AWS stack | Yes, across multi-cloud and hybrid environments | Yes, Kubernetes-native via GitOps |
| Logging & Alerts | CloudTrail, EventBridge | Unified in Azure Monitor | Integrated via Cloud Logging and Anthos tooling |
| Automation Support | Automation via AWS Systems Manager | Azure Automation, Update Management | GitOps, declarative YAML configurations |

Deployment, Scaling & Maintenance

Efficient deployment and ongoing maintenance are critical to keeping hybrid and multi-cloud environments responsive and manageable. Each platform takes a different route to streamline operations, from preconfigured hardware to automated container orchestration.

AWS Outposts: Pre-Built Infrastructure, Managed by AWS

AWS Outposts simplifies the deployment process by delivering a ready-to-use hardware and software stack. Once installed in the data center, AWS handles maintenance, scaling, and updates, ensuring the same reliability and lifecycle management as in the public cloud.

- Delivered as fully managed, rack-mounted hardware

- AWS performs system updates, patching, and scaling operations

- Supports integration with Auto Scaling, Elastic Load Balancing, and other AWS services

- Designed to reduce hands-on maintenance in edge and on-prem environments

Azure Arc: Lightweight Integration, Policy-Driven Management

Azure Arc uses a lightweight, agent-based model to extend Azure’s deployment and automation capabilities to external environments. It’s built for flexibility, letting enterprises scale resources and apply updates using the same policies and automation as native Azure services.

- Agent-based connectivity allows remote resource registration

- Supports GitOps-style and ARM template-based deployments

- Resource scaling and patch management via Azure Policy and Automation

- No need for dedicated hardware, enabling gradual adoption at scale

Google Anthos: Container-Native Scaling with Automated Upgrades

Anthos is designed for environments where automation and elasticity are essential. Built on GKE, it delivers Kubernetes-native scaling, self-healing workloads, and automated version upgrades—ideal for teams embracing DevOps and microservices.

- Auto-scaling based on real-time resource usage

- Rolling updates and in-place upgrades are supported via GKE

- Config sync and policy-driven rollout pipelines

- Works across GCP, AWS, Azure, and on-prem Kubernetes clusters
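Auto-scaling on resource usage in Kubernetes is typically handled by the Horizontal Pod Autoscaler, whose documented core rule is desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric), with no change when the ratio is within a tolerance of 1 (0.1 by default). The sketch below applies that rule; the function name, parameter names, and replica bounds are illustrative, and the real autoscaler also handles stabilization windows and missing metrics, which are omitted here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the basic HPA scaling rule, clamped to the replica bounds."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


# 4 replicas at 90% CPU against a 60% target: scale out.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
# 4 replicas at 63% against 60%: inside the tolerance band, no change.
print(desired_replicas(4, current_metric=63, target_metric=60))   # 4
# 4 replicas at 15% against 60%: scale in.
print(desired_replicas(4, current_metric=15, target_metric=60))   # 1
```

The tolerance band is what stops the replica count from flapping when usage hovers near the target.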

| Aspect | AWS Outposts | Azure Arc | Google Anthos |
| --- | --- | --- | --- |
| Deployment Model | Preconfigured physical infrastructure | Agent-based connection to external environments | Kubernetes-native across multiple clouds |
| Maintenance Responsibility | Managed entirely by AWS | Enterprise-controlled via Azure tools | Shared model using GKE automation |
| Scaling Mechanism | AWS Auto Scaling, manual provisioning | Policy-driven scaling via Azure Resource Manager | Auto-scaling with GKE and declarative configuration |
| Update Strategy | AWS-managed system updates | Controlled through Azure Update Management | Automated Kubernetes upgrades |

Pros and Cons

Hybrid and multi-cloud platforms aren’t just about infrastructure—they define how your teams build, manage, and scale. Below is a clearer view into where each platform shines, and where caution or extra planning may be needed.

AWS Outposts

Pros

- Fully integrated AWS environment: Runs the same services, APIs, and tools found in AWS Cloud—on your own premises.

- Low-latency by design: Ideal for workloads that need fast processing close to users or data sources.

- Managed hardware and updates: AWS handles setup, monitoring, and maintenance of the physical infrastructure.

Cons

- Limited to AWS-only: Doesn’t support resources from Azure, GCP, or other clouds—designed for AWS-centric strategies.

- Hardware required: Involves physical deployment, which may not fit agile or fast-changing environments.

- Upfront commitment: Requires purchase and planning cycles uncommon in typical cloud-native workflows.

Azure Arc

Pros

- Cross-cloud governance: Brings Azure tools like Policy, Monitor, and Defender to non-Azure resources.

- Flexible workload support: Works with both containers and virtual machines, across cloud and on-premises.

- Incremental adoption: Uses lightweight agents, making it easier to start without full migration.

Cons

- Azure-first experience: Best suited for teams already using Azure—others may face a steeper learning curve.

- Not developer-centric: More focused on management and compliance than DevOps workflows.

- Limited abstraction: Doesn’t unify cloud platforms; it centralizes control over them.

Google Anthos

Pros

- Built for multi-cloud apps: Manages Kubernetes clusters across AWS, Azure, and GCP from a single control plane.

- Strong CI/CD and config tooling: Includes tools for deployment automation, policy management, and service mesh.

- Open-source foundation: Based on Kubernetes, Istio, and Knative—giving flexibility and avoiding lock-in.

Cons

- Requires Kubernetes expertise: Assumes your team understands containers, clusters, and microservices.

- Less suited for legacy workloads: VMs are supported only through KubeVirt inside Kubernetes, so traditional VM estates and monolithic systems need extra work.

- Operational overhead: Needs strong platform engineering to deploy, monitor, and maintain effectively.

What Now? Moving from Comparison to Planning

Evaluating Azure Arc, Google Anthos, and AWS Outposts isn’t about finding a one-size-fits-all answer—it’s about identifying the architecture that aligns with how your business runs today and where it needs to evolve.

Before making a decision, consider:

- Where are your workloads today, and where do they need to go?

If your environments span on-prem, cloud, and edge, the right platform should reduce, not add to, that complexity.

- What level of control do you need?

Some platforms offer deep governance; others focus on portability or unified application platforms. What matters more: visibility, consistency, or flexibility?

- How cloud-native is your current architecture?

Mature DevOps teams may prioritize container abstraction and multi-cloud CI/CD. Traditional enterprises may look for seamless integration with existing VM-based systems.

- What’s your risk tolerance and compliance profile?

Data residency, latency, and sovereignty demands could narrow the field based on regulatory alignment or infrastructure reach.
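One way to make these questions concrete is to write your needs down and compare them with each platform's emphasis. The sketch below does that with a simple overlap count. The labels are shorthand drawn from the summaries in this article, not an official capability matrix, and the scoring is deliberately naive.

```python
# Shorthand strengths per platform, paraphrased from this comparison.
PLATFORM_STRENGTHS = {
    "AWS Outposts": {"low-latency", "data-residency", "aws-native", "managed-hardware"},
    "Azure Arc": {"central-governance", "vm-workloads", "multi-cloud", "no-new-hardware"},
    "Google Anthos": {"kubernetes-first", "multi-cloud", "gitops", "app-portability"},
}


def rank_platforms(needs: set) -> list:
    """Rank platforms by how many stated needs overlap with their strengths."""
    scores = {name: len(needs & strengths)
              for name, strengths in PLATFORM_STRENGTHS.items()}
    # Highest score first; ties are broken alphabetically for stable output.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Hypothetical organization: mixed VM estate spread over several clouds.
needs = {"central-governance", "vm-workloads", "multi-cloud"}
for platform, score in rank_platforms(needs):
    print(f"{platform}: {score} of {len(needs)} needs matched")
```

Treat the output as a conversation starter: equal weighting of needs is an assumption, and most organizations will want to weight compliance or latency constraints more heavily than convenience.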

Rather than selecting based on features alone, treat this comparison as a way to define your hybrid or multi-cloud strategy more intentionally. The next step isn’t choosing a tool; it’s clarifying what your architecture should enable.